EU AI Act for Engineering Leaders: Turning High-Risk AI Systems into Testable, Auditable Code

Engineering leaders didn’t ask for another 100-page regulation — but the EU AI Act is here, and high-risk AI systems are now a compliance product you have to ship.

The good news: most of what the EU AI Act demands for high-risk AI systems — risk management, data governance, logging, transparency, human oversight, and robustness — can be turned into code, tests, and repeatable pipelines instead of PDF checklists.

In this guide, we’ll make “EU AI Act for engineering leaders” concrete with 7 practical patterns:

- Guardrails as middleware around AI decisions

- Structured logging to satisfy record-keeping

- CI checks that fail non-compliant builds

- Feature flags and kill switches for high-risk AI

- Human-in-the-loop and explainability patterns

- Evidence bundles you can hand to auditors

- A free scanner workflow to capture web-exposed risk


Throughout, we’ll show how Cyber Rely and our partner site Pentest Testing Corp plug in for risk assessment, remediation, and automated vulnerability scans.

1. EU AI Act in engineering terms (30-second mapping)

For high-risk AI systems, the Act expects (simplified):

- Risk Management System – identify, analyze, and mitigate risks over the lifecycle.

- Data & Data Governance – sound datasets, documentation, and data quality.

- Technical Documentation & Record-Keeping – design, training, evaluation, logs.

- Transparency & Information to Deployers – what the system does and its limits.

- Human Oversight – humans can understand, override, and correct the system.

- Accuracy, Robustness, Cybersecurity – resilience against errors and attacks.

For engineering leaders, this translates into three questions:

- Where in the code can we enforce these rules?

- How do we prove it with tests, logs, and CI/CD gates?

- How do we keep evidence fresh for audits and incident reviews?

The rest of this article is a hands-on answer.

2. Pattern: Guardrails as middleware around high-risk AI

Instead of scattering checks across services, wrap every high-risk AI decision in a guardrail middleware that enforces policy before and after the model runs.

Example: TypeScript middleware for a high-risk decision API

    // aiGuardrail.ts
    import { randomUUID } from "node:crypto";
    import type { Request, Response, NextFunction } from "express";

    type RiskPolicy = {
      allowedPurposes: string[];
      maxConfidenceGap: number;
    };

    const policy: RiskPolicy = {
      allowedPurposes: ["credit_scoring", "loan_pricing"],
      maxConfidenceGap: 0.25,
    };

    export function aiGuardrail(req: Request, res: Response, next: NextFunction) {
      const purpose = req.header("x-ai-purpose");
      const userId = req.header("x-user-id");

      // Reject requests that cannot be traced to a purpose and a user
      if (!purpose || !userId) {
        return res.status(400).json({
          error: "Missing required headers for traceability (purpose, user)",
          euAiAct: { article: 12, requirement: "record-keeping context" },
        });
      }

      // Enforce the purpose allow-list before the model runs
      if (!policy.allowedPurposes.includes(purpose)) {
        return res.status(403).json({
          error: "Purpose not allowed for this high-risk AI system",
          euAiAct: { article: 9, requirement: "risk management limitation" },
        });
      }

      // Attach context for downstream logging & human oversight
      (req as any).aiContext = {
        purpose,
        userId,
        requestId: randomUUID(),
        receivedAt: new Date().toISOString(),
      };

      next();
    }

Wire it into your route:

    // routes.ts
    import express from "express";
    import { aiGuardrail } from "./aiGuardrail";
    import { runCreditModel } from "./creditModel";

    const router = express.Router();

    router.post("/api/high-risk/credit-decision", aiGuardrail, async (req, res) => {
      const ctx = (req as any).aiContext;
      const input = req.body;

      const result = await runCreditModel(input);

      // Keep the response structure consistent for audits
      return res.json({
        decision: result.decision,
        confidence: result.confidence,
        reasonCodes: result.reasonCodes,
        trace: ctx,
      });
    });

    export default router;

This gives you:

- Consistent purpose restriction

- Traceability headers (who, what, why)

- A stable JSON shape auditors can sample later

For deeper GenAI guardrails (prompt injection defenses, output allow-listing, retrieval isolation), see the developer-first patterns in “OWASP GenAI Top 10: 10 Proven Dev Fixes” on the Cyber Rely blog and extend them to high-risk workflows.
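The middleware above enforces policy before the model runs. A post-inference check could look like the following minimal Python sketch. It assumes that `maxConfidenceGap` means "the largest allowed distance between the model's confidence and full certainty"; the source does not define the field, so that reading, the `ModelResult` class, and the `post_model_guardrail` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical threshold mirroring maxConfidenceGap from the policy above.
# Its exact semantics are an assumption of this sketch.
MAX_CONFIDENCE_GAP = 0.25


@dataclass
class ModelResult:
    decision: str
    confidence: float
    reason_codes: List[str]


def post_model_guardrail(result: ModelResult,
                         max_gap: float = MAX_CONFIDENCE_GAP) -> dict:
    """Decide whether a model output may be released automatically."""
    # Distance between full certainty (1.0) and the reported confidence.
    gap = 1.0 - result.confidence

    if not result.reason_codes:
        # Without reason codes a reviewer cannot explain the decision,
        # so it is never released automatically.
        return {"release": False, "route": "manual_review",
                "why": "missing reason codes"}

    if gap > max_gap:
        return {"release": False, "route": "manual_review",
                "why": f"confidence gap {gap:.2f} exceeds {max_gap:.2f}"}

    return {"release": True, "route": "auto", "why": "within policy"}
```

Called right after inference, the returned `route` tells the handler whether to respond directly or queue the case for a human reviewer.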

3. Pattern: EU AI-ready logging with automatic context

Article 12 of the AI Act requires high-risk AI systems to support automatic recording of events (logs) over their lifetime, especially around decisions and incidents.

Treat this as a logging contract: every high-risk decision emits a structured event you can index, retain, and export.

Example: Python decorator for high-risk AI logging

    # ai_logging.py
    import json
    import logging
    from datetime import datetime, timezone
    from functools import wraps
    from typing import Any, Callable, Dict

    logger = logging.getLogger("eu_ai_high_risk")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler()
    handler.setFormatter(logging.Formatter("%(message)s"))  # one JSON object per line
    logger.addHandler(handler)


    def log_high_risk_decision(system_id: str):
        def decorator(fn: Callable):
            @wraps(fn)
            def wrapper(*args, **kwargs):
                # Callers pass ai_context=...; it is logged, not forwarded to fn
                ctx: Dict[str, Any] = kwargs.pop("ai_context", {})
                # Timezone-aware UTC timestamp (datetime.utcnow() is deprecated)
                timestamp = datetime.now(timezone.utc).isoformat().replace("+00:00", "Z")
                try:
                    output = fn(*args, **kwargs)
                    log_record = {
                        "ts": timestamp,
                        "system_id": system_id,
                        "event": "decision",
                        "ctx": ctx,
                        "result": {
                            "decision": getattr(output, "decision", None),
                            "confidence": getattr(output, "confidence", None),
                            "reason_codes": getattr(output, "reason_codes", []),
                        },
                        "status": "success",
                    }
                    logger.info(json.dumps(log_record))
                    return output
                except Exception as e:
                    error_record = {
                        "ts": timestamp,
                        "system_id": system_id,
                        "event": "decision_error",
                        "ctx": ctx,
                        "error_type": type(e).__name__,
                        "error_msg": str(e),
                    }
                    logger.error(json.dumps(error_record))
                    raise
            return wrapper
        return decorator

Use it with your model:

    # credit_decision.py
    from ai_logging import log_high_risk_decision


    class CreditResult:
        def __init__(self, decision, confidence, reason_codes):
            self.decision = decision
            self.confidence = confidence
            self.reason_codes = reason_codes


    @log_high_risk_decision(system_id="credit_risk_ai_v2")
    def run_credit_model(features):
        # ... model inference ...
        return CreditResult(decision="approve", confidence=0.82,
                            reason_codes=["LOW_DTI", "STABLE_INCOME"])

Feed these logs into your SIEM or data lake with:

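- Retention tuned to your risk appetite and sector (the Act sets a floor of at least six months for these logs unless other applicable law says otherwise)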

- Filters for serious incidents and override events

- Ready exports when an authority or auditor asks for evidence

For broader cyber-logging patterns around supply chain and CI, align this with the “7 Proven Software Supply Chain Security Tactics” playbook.
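As a rough illustration of the filter and export steps listed above, the sketch below selects incident-related records from JSON-lines logs for a given time window. The event names `override` and `serious_incident` are assumptions (the decorator above only emits `decision` and `decision_error`), and the function name `select_for_export` is hypothetical. It also assumes every `ts` value is a UTC ISO-8601 string in the same format, so plain string comparison orders them correctly.

```python
import json
from typing import Iterable, Iterator

# Events worth exporting on request. Only "decision_error" comes from the
# decorator above; the other two names are assumptions of this sketch.
EXPORT_EVENTS = {"decision_error", "override", "serious_incident"}


def select_for_export(lines: Iterable[str],
                      start_ts: str,
                      end_ts: str) -> Iterator[dict]:
    """Yield export-worthy records with start_ts <= ts < end_ts."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            # Malformed lines are skipped here; a real pipeline would
            # probably count and report them.
            continue
        if not isinstance(record, dict):
            continue
        ts = record.get("ts", "")
        if record.get("event") in EXPORT_EVENTS and start_ts <= ts < end_ts:
            yield record
```

Because it works on any iterable of strings, the same function can read from an open log file, a SIEM export, or an in-memory list in tests.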

4. Pattern: CI checks that fail non-compliant AI builds

High-risk AI systems should not ship unless:

- Required tests pass (fairness, robustness, regression)

- Documentation is updated

- Risk and control checklists are satisfied

Make that a CI/CD concern, not a spreadsheet.

Example: GitHub Actions workflow for EU AI checks

    # .github/workflows/eu-ai-act-compliance.yml
    name: EU AI Act Compliance Gate

    on:
      push:
        branches: [ main ]
      pull_request:

    jobs:
      eu-ai-act-gate:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v4

          - name: Setup Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.12'

          - name: Install deps
            run: pip install -r requirements.txt

          - name: Run model tests (accuracy & robustness)
            run: |
              pytest tests/model --maxfail=1 --disable-warnings

          - name: Run fairness / bias checks
            run: |
              python tools/check_fairness.py --config conf/eu_ai_fairness.yml

          - name: Validate documentation & risk register
            run: |
              python tools/check_eu_ai_docs.py \
                --system-id credit_risk_ai_v2 \
                --require-docs conf/required_docs.yml

          - name: Fail if any required control missing
            run: |
              python tools/check_controls.py \
                --controls conf/controls_eu_ai.yml

Inside check_controls.py, treat controls as policy-as-code (e.g., YAML with “must”, “should” flags). This is very similar to the CI gates Cyber Rely uses for supply-chain policy and KEV-gated builds in “Gate CI with CISA KEV JSON: Ship Safer Builds”.

If a control is broken, the build fails — but you can still support “break-glass” labels for emergencies, logged and time-boxed.
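The source does not show check_controls.py, so the following is only a sketch of how its core logic might work under simple assumptions: controls arrive as already-parsed dictionaries with a hypothetical `id` and `level` ("must" or "should"), and check results arrive as a mapping from control id to pass/fail. Loading the YAML file and detecting the break-glass label are left out.

```python
from typing import Dict, List, Tuple


def evaluate_controls(controls: List[dict],
                      results: Dict[str, bool],
                      break_glass: bool = False) -> Tuple[int, List[str]]:
    """Return (exit_code, messages) for a CI gate.

    exit_code is 1 when a "must" control fails, unless break_glass is set.
    """
    messages: List[str] = []
    failed_must = False

    for control in controls:
        control_id = control["id"]
        level = control.get("level", "must")  # default to the strict level
        # A control with no recorded result counts as not satisfied.
        if results.get(control_id, False):
            continue
        if level == "must":
            failed_must = True
            messages.append(f"FAIL {control_id}: required control not satisfied")
        else:
            messages.append(f"WARN {control_id}: recommended control not satisfied")

    if failed_must and break_glass:
        # The override is made visible so it can be logged and time-boxed.
        messages.append("BREAK-GLASS: required failures overridden for this build")
        return 0, messages

    return (1 if failed_must else 0), messages
```

A CLI wrapper would print the messages and pass the first element to `sys.exit`, so that a failed "must" control stops the workflow step.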

5. Pattern: Feature flags & kill switches for high-risk AI

The AI Act expects providers to take corrective action (Article 20), including withdrawing or disabling a system, and to mitigate risk quickly when something goes wrong.

Implement region- and use-case-specific feature flags that can:

- Disable a high-risk AI model in a specific member state

- Force all decisions into manual review mode

- Downgrade from full automation to “recommendation only”

Example: Simple flag-based wrapper (Node.js)

    // aiFlags.ts
    type AiFlags = {
      eu_ai_enabled: boolean;
      eu_ai_manual_review_only: boolean;
      disable_for_country: string[];
    };

    const flags: AiFlags = {
      eu_ai_enabled: true,
      eu_ai_manual_review_only: false,
      disable_for_country: ["DE"], // e.g. during an incident
    };

    export function evaluateAiFlags(countryCode: string) {
      if (!flags.eu_ai_enabled) {
        return { mode: "disabled", reason: "Global kill switch enabled" };
      }

      if (flags.disable_for_country.includes(countryCode)) {
        return { mode: "disabled", reason: `Disabled for ${countryCode}` };
      }

      if (flags.eu_ai_manual_review_only) {
        return { mode: "manual_review", reason: "Manual review enforced" };
      }

      return { mode: "full_auto", reason: "All checks passed" };
    }

Use in your API:

    // Inside an Express handler (req, res in scope)
    const modeInfo = evaluateAiFlags(req.header("x-country") || "EU");

    if (modeInfo.mode === "disabled") {
      return res.status(503).json({
        error: "AI temporarily disabled",
        euAiAct: { requirement: "corrective action in case of risk" },
        reason: modeInfo.reason,
      });
    }

    if (modeInfo.mode === "manual_review") {
      // Skip AI, queue for human decision
      // (queueManualReview is your own queueing helper)
      queueManualReview(req.body);
      return res.json({ status: "queued_for_review" });
    }

    // Otherwise call the AI model as usual

These flags give you fast incident response without redeploying code, provided they are loaded from a runtime configuration source rather than hard-coded as in the simplified example above.
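For flags to change without a redeploy, they have to live outside the code. One possible approach, sketched here in Python, reads them from a JSON file on each evaluation and fails closed when the file is missing or unreadable. The file-based storage, the `load_flags` helper, and the fail-closed defaults are assumptions of this sketch; a flag service or config store would follow the same shape.

```python
import json
from pathlib import Path

# Fail-closed defaults (an assumption of this sketch): if the flag source is
# unavailable, the AI path is switched off rather than left running.
SAFE_DEFAULTS = {
    "eu_ai_enabled": False,
    "eu_ai_manual_review_only": True,
    "disable_for_country": [],
}


def load_flags(path: str) -> dict:
    """Read flags from a JSON file, falling back to SAFE_DEFAULTS."""
    try:
        raw = json.loads(Path(path).read_text(encoding="utf-8"))
    except (OSError, json.JSONDecodeError):
        return dict(SAFE_DEFAULTS)
    if not isinstance(raw, dict):
        return dict(SAFE_DEFAULTS)
    flags = dict(SAFE_DEFAULTS)
    # Only known keys are accepted; unknown keys in the file are ignored.
    flags.update({key: raw[key] for key in SAFE_DEFAULTS if key in raw})
    return flags


def evaluate_ai_flags(flags: dict, country_code: str) -> dict:
    """Same decision order as evaluateAiFlags in the Node.js example."""
    if not flags["eu_ai_enabled"]:
        return {"mode": "disabled", "reason": "Global kill switch enabled"}
    if country_code in flags["disable_for_country"]:
        return {"mode": "disabled", "reason": f"Disabled for {country_code}"}
    if flags["eu_ai_manual_review_only"]:
        return {"mode": "manual_review", "reason": "Manual review enforced"}
    return {"mode": "full_auto", "reason": "All checks passed"}
```

Failing closed is a design choice: it trades availability for safety during a configuration outage, which may or may not suit your service.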

6. Pattern: Human oversight & explainability as first-class APIs

High-risk AI systems must be designed for effective human oversight (Article 14), and reviewers need meaningful explanations to exercise it.

Treat these not as UI features but as APIs that your back office and tooling can call.

Example: Decision explanation endpoint

    // explanation.ts
    import express from "express";
    import { getDecisionLog } from "./storage";

    const router = express.Router();

    router.get("/api/high-risk/credit-decision/:id/explanation", async (req, res) => {
      const id = req.params.id;
      const record = await getDecisionLog(id);

      if (!record) {
        return res.status(404).json({ error: "Decision not found" });
      }

      return res.json({
        decisionId: id,
        decision: record.result.decision,
        confidence: record.result.confidence,
        reasonCodes: record.result.reason_codes,
        inputSummary: record.input_summary,
        modelVersion: record.model_version,
        timestamp: record.ts,
      });
    });

    export default router;

Back-office reviewers can then:

- See reason codes, confidence, and inputs

- Override the decision and log a corrective action

- Attach notes for future audits

This works particularly well when paired with AI security practices from Cyber Rely’s GenAI and REST API articles (e.g., Broken Access Control in RESTful APIs and OWASP GenAI Top 10 fixes) so explanations don’t leak sensitive data.
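The override step that reviewers perform can be captured as its own structured event so it sits next to the original decision in the logs. The sketch below is one possible shape, not a prescribed format: the `build_override_event` function, the `decision_id` and `reviewer_id` fields, and the rule that a note is mandatory are all assumptions.

```python
from datetime import datetime, timezone


def build_override_event(decision_record: dict,
                         reviewer_id: str,
                         new_decision: str,
                         note: str) -> dict:
    """Build a log event describing a human override of an AI decision."""
    # Requiring a note is a policy choice of this sketch: it forces the
    # reviewer to leave a rationale that later audits can read.
    if not note.strip():
        raise ValueError("An override must carry a reviewer note")

    return {
        "ts": datetime.now(timezone.utc).isoformat(),
        "event": "override",
        "decision_id": decision_record["decision_id"],  # hypothetical field
        "system_id": decision_record.get("system_id"),
        "original_decision": decision_record["result"]["decision"],
        "new_decision": new_decision,
        "reviewer_id": reviewer_id,
        "note": note,
    }
```

The resulting dictionary can be serialized with `json.dumps` and written through the same logger as the decision events, so overrides and decisions share one retention and export path.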

7. Pattern: Evidence bundles you can hand to auditors

For high-risk AI, you’ll need to show technical documentation, logs, and tests together.

Automate a script that, on demand or on each release, bundles:

- Key design docs (architecture, data flow, DPIAs)

- Control and test results (fairness, robustness, security)

- High-risk AI logs for a chosen sample period

- CI/CD pipeline config (to show gates)

Example: Simple Python evidence pack builder

    # build_evidence_pack.py
    import glob
    import tarfile
    from datetime import datetime, timezone

    EVIDENCE_ITEMS = [
        "docs/eu-ai/architecture.md",
        "docs/eu-ai/risk-register.xlsx",
        "reports/tests/model_accuracy.json",
        "reports/tests/fairness_report.json",
        "reports/security/ai_pentest_summary.pdf",
        "logs/eu_ai_high_risk/*.jsonl",
        ".github/workflows/eu-ai-act-compliance.yml",
    ]


    def build_evidence_pack(system_id: str):
        # Timezone-aware UTC timestamp (datetime.utcnow() is deprecated)
        ts = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
        out_name = f"evidence_{system_id}_{ts}.tar.gz"

        with tarfile.open(out_name, "w:gz") as tar:
            for pattern in EVIDENCE_ITEMS:
                # Patterns that match nothing are skipped silently
                for path in sorted(glob.glob(pattern)):
                    tar.add(path)

        print(f"Evidence pack created: {out_name}")


    if __name__ == "__main__":
        build_evidence_pack(system_id="credit_risk_ai_v2")

Store these artifacts in a secure bucket with immutable retention and access controls.
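An evidence pack is easier to trust if it records exactly which files went in and flags the ones that were expected but absent. As a hedged sketch, a manifest with SHA-256 digests could be generated alongside the archive; the `build_manifest` function and its output shape are hypothetical additions, not part of the builder above.

```python
import glob
import hashlib
import os
from typing import List


def build_manifest(patterns: List[str]) -> dict:
    """List matched files with SHA-256 digests, plus patterns with no match."""
    files = []
    missing = []

    for pattern in patterns:
        matches = sorted(p for p in glob.glob(pattern) if os.path.isfile(p))
        if not matches:
            # Evidence that was expected but not found is reported, not hidden.
            missing.append(pattern)
            continue
        for path in matches:
            digest = hashlib.sha256()
            with open(path, "rb") as fh:
                # Read in chunks so large log files do not load into memory.
                for chunk in iter(lambda: fh.read(65536), b""):
                    digest.update(chunk)
            files.append({"path": path, "sha256": digest.hexdigest()})

    return {"files": files, "missing": missing}
```

Writing the result with `json.dump` into the archive, and failing the release when `missing` is non-empty, would turn a silent gap into a visible one.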

8. Use a free external scan to validate your attack surface

High-risk AI systems don’t live in isolation — they’re exposed via web apps, APIs, and admin consoles. A fast way to baseline external risk is to run your domains through the Free Website Vulnerability Scanner provided by Pentest Testing Corp.

Screenshot: Free Website Vulnerability Scanner

In this screenshot, you can see our Free Website Vulnerability Scanner at work: a simple URL field and “Scan Now” button that triggers checks for HTTP security headers, exposed files, and common web misconfigurations. This is an ideal “first look” for engineering leaders shipping high-risk AI systems — you can quickly spot missing HSTS, weak cookies, and exposed admin paths before auditors or attackers do.

From there, you can transition to a deeper manual assessment or a full AI-aware pentest.

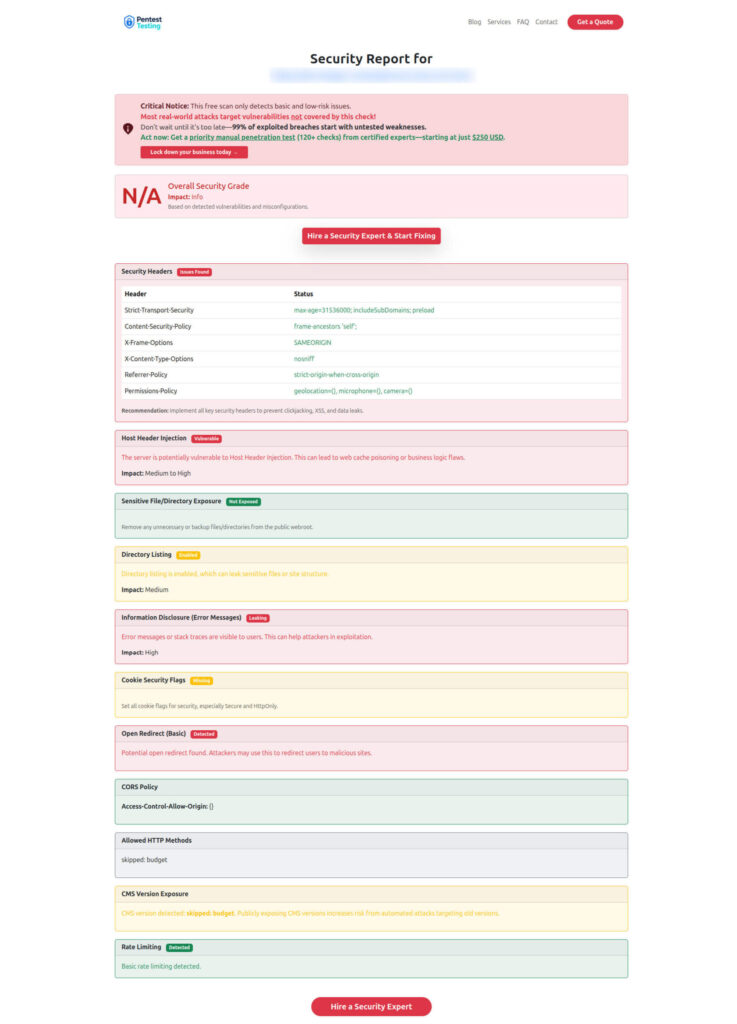

9. Use sample reports as AI Act-ready evidence

The output of the free scan is a report you can attach to your EU AI Act technical documentation and risk register. It demonstrates that you’re actively monitoring the attack surface around your high-risk AI.

Sample Assessment Report to check Website Vulnerability

This sample report from our free scanner highlights HTTP header gaps, exposed paths like /admin/ and .git/, and cookie flag issues. Each finding includes severity, description, and remediation guidance. For EU AI Act compliance, you can attach this kind of report directly to your risk management file and map individual findings to corrective actions, tracked through your remediation backlog.
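Mapping findings to corrective actions can be automated once the findings are in a structured form. The scanner's export format is not described here, so the sketch below assumes each finding is a dictionary with hypothetical `title` and `severity` keys, and the remediation windows in `SLA_DAYS` are placeholder values to replace with your own policy.

```python
from datetime import date, timedelta
from typing import List

# Placeholder remediation windows in days per severity (assumed values).
SLA_DAYS = {"critical": 7, "high": 14, "medium": 30, "low": 90}


def to_corrective_actions(findings: List[dict], found_on: date) -> List[dict]:
    """Turn scan findings into backlog items with due dates."""
    actions = []
    for finding in findings:
        severity = str(finding.get("severity", "medium")).lower()
        # Unknown severities fall back to the "medium" window.
        days = SLA_DAYS.get(severity, SLA_DAYS["medium"])
        actions.append({
            "title": f"Remediate: {finding['title']}",
            "severity": severity,
            "due": (found_on + timedelta(days=days)).isoformat(),
            "status": "open",
        })
    return actions
```

Each resulting item can be pushed to your issue tracker and referenced from the risk register, which keeps the link between a finding and its fix auditable.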

To go beyond surface-level scans, you can upgrade to manual penetration testing and remediation from Pentest Testing Corp, turning these findings into verified fixes.

10. When to bring in risk assessment and remediation services

At some point, spreadsheets and ad-hoc fixes stop being enough. This is where structured services help.

- Use Risk Assessment Services for HIPAA, PCI DSS, SOC 2, ISO 27001 & GDPR to get a formal, audit-ready view of your environment and how AI systems fit into your compliance posture.

- Link: https://www.pentesttesting.com/risk-assessment-services/

- Use Remediation Services for HIPAA, PCI DSS, SOC 2, ISO 27001 & GDPR when you need help actually implementing encryption, logging, network segmentation, and process changes — not just identifying gaps.

- Link: https://www.pentesttesting.com/remediation-services/

Together with Cyber Rely’s cybersecurity services and web application penetration testing for AI-powered apps, you get a full loop from discovery → fix → evidence.

11. Related Cyber Rely resources to go deeper

If you’re implementing an “EU AI Act for engineering leaders” roadmap, these Cyber Rely posts give you ready-made patterns:

- “OWASP GenAI Top 10: 10 Proven Dev Fixes” – guardrails, prompt-injection defenses, and CI gates for GenAI.

- “7 Proven Software Supply Chain Security Tactics” – SBOM, VEX, and SLSA wiring for dependable build provenance.

- “Gate CI with CISA KEV JSON: Ship Safer Builds” – use KEV as a hard CI gate so exploitable components never hit prod.

- “7 Proven Steps: SLSA 1.1 Implementation in CI/CD” – practical steps to sign builds and emit provenance with minimal friction.

- “Preventing Broken Access Control in RESTful APIs” – essential if your high-risk AI is exposed via APIs.

Treat this EU AI Act article as the governance layer, and those posts as your technical building blocks.

From there, you can iterate toward full compliance, with Cyber Rely as your engineering partner and Pentest Testing Corp providing AI-aware pentests, risk assessments, and remediation support.
