7 Proven Zero Trust Egress Controls for Microservices

Egress is where “internal-only” systems quietly become internet-capable data movers.

In modern microservices, a single compromised workload can:

- Call other internal services you never intended it to reach (east-west)

- Reach external endpoints for data exfiltration (north-south)

- Abuse cloud metadata endpoints, instance credentials, or over-scoped tokens

- Tunnel through “allowed” DNS/HTTPS paths that no one is monitoring

Zero Trust egress is how you reduce blast radius and make “service-to-service security” enforceable—without breaking delivery velocity.

1) Start with an “Egress Contract” (not a firewall rule)

Most teams jump straight to network policy and accidentally break DNS, package installs, or service discovery. Instead, define an egress contract per service:

- Who (workload identity) can call what (service/domain)

- How (mTLS, ports, methods)

- Why (ticket/control mapping)

- Evidence (logs + policy attestations)

Example: a simple egress contract in-repo:

```yaml
# security/egress-contracts/payments-api.yaml
service: payments-api
identity:
  k8s_service_account: payments-api
allowed_internal:
  - to_service: orders-api
    namespace: prod
    ports: [443]
    protocol: https
  - to_service: ledger-db
    namespace: prod
    ports: [5432]
    protocol: tcp
allowed_external:
  - to_fqdn: "api.stripe.com"
    ports: [443]
    protocol: https
requirements:
  mtls: required
  authz_policy: required
  audit_logs: required
metadata:
  owner_team: payments
  change_ticket: SEC-4821
  review_interval_days: 90
```

This “contract” becomes the source for:

- K8s NetworkPolicy / CNI policy

- Service mesh authZ policy

- CI checks (policy-as-code)

- Runtime detection (baseline vs. allowed)
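
As a rough illustration of the contract acting as a source for NetworkPolicy, the sketch below renders a policy manifest from the contract's internal rules. It is a minimal sketch under several assumptions: the contract has already been loaded into a dict, the pod `app` label equals the service name, all ports are TCP, and the DNS rule is handled elsewhere. The function name `render_network_policy` and the generated policy name are hypothetical.

```python
import json

# Hypothetical in-memory form of security/egress-contracts/payments-api.yaml
# (load the file with your YAML parser of choice; only internal rules are shown).
CONTRACT = {
    "service": "payments-api",
    "allowed_internal": [
        {"to_service": "orders-api", "namespace": "prod", "ports": [443]},
        {"to_service": "ledger-db", "namespace": "prod", "ports": [5432]},
    ],
}


def render_network_policy(contract: dict, namespace: str) -> dict:
    """Build a NetworkPolicy manifest (as a dict) from a contract's internal rules."""
    egress = []
    for rule in contract.get("allowed_internal", []):
        egress.append({
            "to": [{
                # Both selectors in ONE entry means "this namespace AND this pod label".
                "namespaceSelector": {
                    "matchLabels": {"kubernetes.io/metadata.name": rule["namespace"]}
                },
                # Assumption: the destination's app label equals its service name.
                "podSelector": {"matchLabels": {"app": rule["to_service"]}},
            }],
            # Assumption: every contract port is TCP.
            "ports": [{"protocol": "TCP", "port": p} for p in rule["ports"]],
        })
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"{contract['service']}-egress-from-contract",
            "namespace": namespace,
        },
        "spec": {
            "podSelector": {"matchLabels": {"app": contract["service"]}},
            "policyTypes": ["Egress"],
            "egress": egress,
        },
    }


if __name__ == "__main__":
    print(json.dumps(render_network_policy(CONTRACT, "prod"), indent=2))
```

Generating the manifest rather than hand-writing it keeps the contract and the enforced policy from drifting apart; the JSON output can be applied as-is or converted to YAML.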

2) Enforce identity first: mTLS everywhere (mesh or not)

Zero Trust egress works best when the destination can verify who is calling (not just the source IP).
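
To make "who is calling" concrete, the sketch below parses a SPIFFE-style workload URI into its parts so a destination can compare them against what it expects. It assumes identities follow the layout `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, as in the app-level example in this section; the function name `parse_workload_id` is hypothetical, and your PKI may use a different path scheme.

```python
from urllib.parse import urlparse


def parse_workload_id(uri: str) -> dict:
    """Split a SPIFFE-style URI into trust domain, namespace, and service account."""
    parsed = urlparse(uri)
    if parsed.scheme != "spiffe" or not parsed.netloc:
        raise ValueError(f"not a SPIFFE-style URI: {uri!r}")
    parts = parsed.path.strip("/").split("/")
    # Assumed path layout: /ns/<namespace>/sa/<service-account>
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa":
        raise ValueError(f"unexpected path layout: {parsed.path!r}")
    return {
        "trust_domain": parsed.netloc,
        "namespace": parts[1],
        "service_account": parts[3],
    }
```

Comparing structured fields (namespace, service account) rather than raw strings makes it easier to express rules such as "any caller from the prod namespace" later on.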

Option A: Service mesh mTLS (recommended)

Example (Istio-style) strict mTLS:

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: prod
spec:
  mtls:
    mode: STRICT
```

If you need exceptions (temporary), keep them explicit and time-bound:

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: legacy-exception
  namespace: prod
spec:
  selector:
    matchLabels:
      app: legacy-worker
  mtls:
    mode: PERMISSIVE
```

Option B: App-level mTLS (when you can’t run a mesh)

Go HTTP server that requires client certs and checks identity metadata:

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"log"
	"net/http"
	"os"
)

func main() {
	caPEM, err := os.ReadFile("/etc/tls/ca.pem")
	if err != nil {
		log.Fatalf("read CA bundle: %v", err)
	}
	caPool := x509.NewCertPool()
	if !caPool.AppendCertsFromPEM(caPEM) {
		log.Fatal("no valid CA certificates found in /etc/tls/ca.pem")
	}

	tlsCfg := &tls.Config{
		ClientAuth: tls.RequireAndVerifyClientCert,
		ClientCAs:  caPool,
		MinVersion: tls.VersionTLS12,
		// Runs in addition to the standard chain verification against ClientCAs.
		VerifyPeerCertificate: func(rawCerts [][]byte, _ [][]*x509.Certificate) error {
			if len(rawCerts) == 0 {
				return errors.New("no client certificate presented")
			}
			cert, err := x509.ParseCertificate(rawCerts[0])
			if err != nil {
				return err
			}
			// Example: enforce a SAN pattern (adjust to your PKI/SPIFFE scheme)
			for _, uri := range cert.URIs {
				if uri.String() == "spiffe://prod/ns/prod/sa/payments-api" {
					return nil
				}
			}
			// "certificate unknown" style failure (tls.AlertError needs Go 1.21+)
			return tls.AlertError(46)
		},
	}

	srv := &http.Server{
		Addr:      ":8443",
		TLSConfig: tlsCfg,
		Handler: http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			w.WriteHeader(http.StatusOK)
			w.Write([]byte("ok"))
		}),
	}

	log.Fatal(srv.ListenAndServeTLS("/etc/tls/server.pem", "/etc/tls/server.key"))
}
```

3) Apply Zero Trust principles to service-to-service egress

A) Deny-by-default egress at the network layer

Kubernetes NetworkPolicy baseline (block everything unless allowed):

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: payments-egress-deny-all
  namespace: prod
spec:
  podSelector:
    matchLabels:
      app: payments-api
  policyTypes: ["Egress"]
  egress: []
```

Then add only required egress. Example: allow DNS to kube-dns and HTTPS to a known internal namespace label:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: payments-egress-allow-dns-and-internal
  namespace: prod
spec:
  podSelector:
    matchLabels:
      app: payments-api
  policyTypes: ["Egress"]
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: prod
          podSelector:
            matchLabels:
              app: orders-api
      ports:
        - protocol: TCP
          port: 443
```

Note: “FQDN allowlists” are CNI-specific (some CNIs support them; native NetworkPolicy doesn’t). If you need domain-based Zero Trust egress, standardize on a CNI that enforces it, and treat that as a platform control.
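
Because FQDN policy syntax differs between CNIs, the sketch below only illustrates the matching semantics you would want to agree on before choosing one: exact names plus an optional `*.` wildcard. It is not any particular CNI's behavior. The function name `fqdn_allowed` and the wildcard entry in the usage note are hypothetical.

```python
def fqdn_allowed(host: str, allowlist: list[str]) -> bool:
    """Return True if host matches an exact entry or a '*.suffix' wildcard entry."""
    host = host.rstrip(".").lower()
    for pattern in allowlist:
        pattern = pattern.rstrip(".").lower()
        if pattern.startswith("*."):
            suffix = pattern[1:]  # "*.example.com" -> ".example.com"
            # The leading dot in the suffix prevents "evilexample.com" from
            # matching, and the length check rejects the bare parent domain.
            if host.endswith(suffix) and len(host) > len(suffix):
                return True
        elif host == pattern:
            return True
    return False
```

For example, `fqdn_allowed("api.stripe.com", ["api.stripe.com"])` passes, while a lookalike such as `evilstripe.com` does not match a `*.stripe.com` entry. In this sketch a wildcard matches any depth of subdomain; tighten that if your platform treats wildcards as single-label.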

B) Add identity-aware authorization at L7 (service mesh / gateway)

Example (Istio-style) allow only a specific service account to call a destination:

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: orders-allow-only-payments
  namespace: prod
spec:
  selector:
    matchLabels:
      app: orders-api
  action: ALLOW
  rules:
    - from:
        - source:
            principals:
              - "cluster.local/ns/prod/sa/payments-api"
      to:
        - operation:
            ports: ["443"]
            methods: ["GET", "POST"]
```

This is the big leap: network egress controls + identity metadata enforcement.
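
The decision that the authorization policy above encodes can be sketched as a small function: a request passes only if one rule matches the caller's identity, the port, and the method together. This is a simplified model for reasoning about the rule, not Istio's actual evaluation engine; the `Rule` type and `is_allowed` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    principals: frozenset
    ports: frozenset
    methods: frozenset


# Mirrors the orders-allow-only-payments policy: only payments-api may GET/POST on 443.
ORDERS_RULES = [
    Rule(
        principals=frozenset({"cluster.local/ns/prod/sa/payments-api"}),
        ports=frozenset({443}),
        methods=frozenset({"GET", "POST"}),
    )
]


def is_allowed(principal: str, port: int, method: str, rules=ORDERS_RULES) -> bool:
    """ALLOW semantics: pass only if a single rule matches every field."""
    return any(
        principal in r.principals and port in r.ports and method.upper() in r.methods
        for r in rules
    )
```

The same caller on a different port, or with a method outside the list, is rejected. A network-layer rule cannot express that, because it sees neither the method nor the caller's identity.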

4) Policy attestations: make egress rules auditable and reviewable

When a breach happens, you want proof that:

- the policy existed,

- was reviewed,

- and was actually enforced at runtime.

A) OPA/Rego policy for “egress contract” enforcement in CI

Example Rego rule: every workload with an app label must have an egress contract file:

```rego
package egress.guardrails

# rego.v1 syntax (OPA 0.59+); older releases wrote this rule as `deny[msg] { ... }`
import rego.v1

# Flag any Deployment whose namespace/app pair has no matching egress contract.
deny contains msg if {
	input.kind == "Deployment"
	ns := input.metadata.namespace
	app := input.spec.template.metadata.labels.app
	not contract_exists(ns, app)
	msg := sprintf("missing egress contract for %s/%s", [ns, app])
}

contract_exists(ns, app) if {
	# Your CI loads a data document listing contract files
	some i
	data.contracts[i].namespace == ns
	data.contracts[i].app == app
}
```

In CI, run policy tests against PRs (example GitHub Actions):

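```yaml
name: policy-checks
on: [pull_request]
jobs:
  opa:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      # Assumes OPA is not preinstalled on the runner; install it first.
      - uses: open-policy-agent/setup-opa@v2
      - name: Run OPA checks
        run: |
          opa test policy/ -v
          # --fail-defined makes the step fail when any deny message is produced
          opa eval --fail-defined -i k8s/manifests/deployments.json -d policy/ "data.egress.guardrails.deny[_]"
```

B) Runtime enforcement pipeline (RBAC + ABAC)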

Treat enforcement like a pipeline:

- RBAC: who can change policies

- ABAC: what a service can reach based on labels/identity

- Attestation: store policy hashes + approvals
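
The attestation step can be sketched as hashing the exact contract bytes that were reviewed, then re-checking that hash later against what is deployed. The annotation keys follow the ones used in this section; the function names `build_attestation` and `attestation_matches` are hypothetical, and where the approval itself is recorded is left to your review tooling.

```python
import hashlib
import hmac

ANNOTATION_PREFIX = "security.cyberrely.com"


def build_attestation(contract_name: str, contract_bytes: bytes, approved_by: str) -> dict:
    """Return the annotations to stamp on a workload for a reviewed contract."""
    digest = hashlib.sha256(contract_bytes).hexdigest()
    return {
        f"{ANNOTATION_PREFIX}/egress-contract": contract_name,
        f"{ANNOTATION_PREFIX}/policy-sha256": digest,
        f"{ANNOTATION_PREFIX}/approved-by": approved_by,
    }


def attestation_matches(annotations: dict, contract_bytes: bytes) -> bool:
    """True if the stamped hash equals the hash of the contract currently in the repo."""
    expected = annotations.get(f"{ANNOTATION_PREFIX}/policy-sha256", "")
    actual = hashlib.sha256(contract_bytes).hexdigest()
    return hmac.compare_digest(expected, actual)
```

A periodic job that runs `attestation_matches` against live workloads turns "the policy existed and was reviewed" into something you can check mechanically: any edit to the contract after approval changes the hash.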

Minimal “policy hash” stamping (store in annotations):

```yaml
metadata:
  annotations:
    security.cyberrely.com/egress-contract: "payments-api.yaml"
    security.cyberrely.com/policy-sha256: "9f6c...e21a"
    security.cyberrely.com/approved-by: "platform-security"
```

5) Build runtime guardrails that don’t break delivery

The practical trick: progressive enforcement.

Phase 1: Observe-only (log every egress)

If you run Envoy/service mesh, standardize egress access logs:

```yaml
accessLogFormat: |
  ts=%START_TIME% src=%DOWNSTREAM_PEER_URI_SAN% dst=%UPSTREAM_CLUSTER%
  method=%REQ(:METHOD)% path=%REQ(X-ENVOY-ORIGINAL-PATH?:PATH)%
  status=%RESPONSE_CODE% bytes=%BYTES_SENT% trace=%REQ(X-REQUEST-ID)%
```

Phase 2: Soft-block (alert + allow)

Create a “default allow but alert on unknown” baseline job:

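```python
# tools/egress_baseline_watch.py
# Reads JSON egress events (one per line) from stdin and reports the most
# frequent (source, destination) pairs that are not in the allowlist.
import json
import sys
from collections import Counter

ALLOWED = {
    ("payments-api", "orders-api"),
    ("payments-api", "ledger-db"),
}

c = Counter()
for line in sys.stdin:
    if not line.strip():
        continue  # skip blank lines
    ev = json.loads(line)
    # Field names follow the egress event schema in section 6 (dst_name).
    pair = (ev["src_service"], ev["dst_name"])
    if pair not in ALLOWED:
        c[pair] += 1

for pair, n in c.most_common(20):
    print(f"ALERT: unexpected egress {pair} count={n}")
```

Phase 3: Hard-block (deny)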

Once baselines stabilize, flip to deny-by-default at network + L7.

This is how you keep Zero Trust egress from turning into a productivity tax.
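
"Once baselines stabilize" is easier to act on with an explicit gate. The sketch below treats a service as ready for hard-block only when every one of the last N days has data and shows zero unexpected egress. The 14-day default, the function name `ready_for_hard_block`, and the per-day count input are assumptions for illustration; pick a window that covers your batch jobs and release cadence.

```python
from datetime import date, timedelta


def ready_for_hard_block(unexpected_by_day: dict, today: date, quiet_days: int = 14) -> bool:
    """True if each of the last `quiet_days` days has data and zero unexpected egress."""
    for offset in range(quiet_days):
        day = today - timedelta(days=offset)
        # A missing day counts as "not ready": no data is not the same as quiet.
        if day not in unexpected_by_day or unexpected_by_day[day] > 0:
            return False
    return True
```

Feeding this from the daily output of the soft-block alert job gives each namespace a clear promotion criterion instead of a judgment call.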

6) Observability for egress: “forensics-ready” by design

If investigations matter, log egress with the same discipline you’d apply to APIs and CI/CD evidence. Cyber Rely’s forensics-ready telemetry and microservices patterns translate directly to egress visibility: consistent fields, correlation IDs, and identity attribution. (Cyber Rely)

Minimum egress event schema (copy/paste)

```json
{
  "ts": "2026-02-15T10:12:01Z",
  "event": "egress.connect",
  "src_service": "payments-api",
  "src_namespace": "prod",
  "src_identity": "cluster.local/ns/prod/sa/payments-api",
  "dst_type": "service",
  "dst_name": "orders-api",
  "dst_namespace": "prod",
  "dst_port": 443,
  "protocol": "https",
  "decision": "allow",
  "policy_id": "orders-allow-only-payments",
  "trace_id": "b2f3...",
  "request_id": "7a1d..."
}
```

OpenTelemetry: attach identity + destination attributes

```python
# example: add semantic-ish attributes to spans
from opentelemetry import trace

# Use the span that is active around the outbound call.
span = trace.get_current_span()
span.set_attribute("egress.dst.service", "orders-api")
span.set_attribute("egress.dst.namespace", "prod")
span.set_attribute("egress.protocol", "https")
span.set_attribute("egress.policy_id", "orders-allow-only-payments")
span.set_attribute("src.identity", "cluster.local/ns/prod/sa/payments-api")
```

7) Response playbook: when egress anomalies occur

When your detections fire, speed matters. Use a tight, engineering-friendly playbook:

A) Triage (10–20 minutes)

- Identify source identity (service account / workload)

- Identify destination (service/domain/IP)

- Confirm whether it violates the egress contract
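
The last triage step can be automated so responders are not reading YAML under pressure. The sketch below checks one egress event, using the field names from the event schema in section 6, against a contract loaded into a dict. The function name `violates_contract` is hypothetical, and treating every non-`service` destination type as external is an assumption.

```python
# Hypothetical in-memory form of the payments-api egress contract.
CONTRACT = {
    "service": "payments-api",
    "allowed_internal": [
        {"to_service": "orders-api", "namespace": "prod", "ports": [443]},
        {"to_service": "ledger-db", "namespace": "prod", "ports": [5432]},
    ],
    "allowed_external": [
        {"to_fqdn": "api.stripe.com", "ports": [443]},
    ],
}


def violates_contract(event: dict, contract: dict) -> bool:
    """Return True if the egress event is not covered by any contract rule."""
    if event["src_service"] != contract["service"]:
        raise ValueError("event does not belong to this contract's service")
    if event["dst_type"] == "service":
        return not any(
            r["to_service"] == event["dst_name"]
            and r["namespace"] == event.get("dst_namespace")
            and event["dst_port"] in r["ports"]
            for r in contract.get("allowed_internal", [])
        )
    # Assumption: any other dst_type is an external destination matched by FQDN.
    return not any(
        r["to_fqdn"] == event["dst_name"] and event["dst_port"] in r["ports"]
        for r in contract.get("allowed_external", [])
    )
```

A `True` result means the connection was never sanctioned and containment should start; a `False` result points toward a noisy detection or a compromised but in-contract path, which needs a different investigation.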

B) Contain (fastest safe block)

Block by identity at L7 if possible (service mesh), otherwise block at egress gateway/CNI.

Example: quarantine a compromised workload via label + NetworkPolicy:

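```shell
kubectl label pod -n prod -l app=payments-api quarantine=true --overwrite
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: quarantine-deny-egress
  namespace: prod
spec:
  podSelector:
    matchLabels:
      quarantine: "true"
  policyTypes: ["Egress"]
  egress: []
```

Note: NetworkPolicies are additive, so this policy cannot override an existing allow rule that still selects the pod. To fully cut egress, also relabel the pod (for example, change its app label) so that only the quarantine policy matches it.

C) Rotate + revoke (stop token reuse)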

- Rotate service credentials/certs

- Revoke overly-broad tokens

- Re-issue least-privileged identity bindings

For deeper incident work (timeline + scope + containment validation), route to DFIR.

👉 If you need help validating impact and building a defensible timeline, see: Digital Forensic Analysis Services (DFIR).

Engineering integration checklist (CI/CD, K8s, gateways)

Use this as a delivery-safe rollout list:

- Each service has an egress contract file in-repo

- Deny-by-default egress at the cluster layer (namespace/app scoped)

- mTLS enforced (mesh strict, or app-level cert verification)

- L7 authZ policies reference service identity, not IPs

- Policy-as-code runs in CI (OPA/Gatekeeper/Kyverno style)

- Every egress decision is logged with identity + policy ID + trace/request IDs

- “Observe-only → soft-block → hard-block” rollout plan exists per namespace

- Break-glass procedure is time-bound and auditable

- Response playbook tested (tabletop + chaos-style drills)

If you want a fast, practical assessment of where your architecture is over-exposed (including service-to-service paths, egress weak points, and governance gaps), start here:

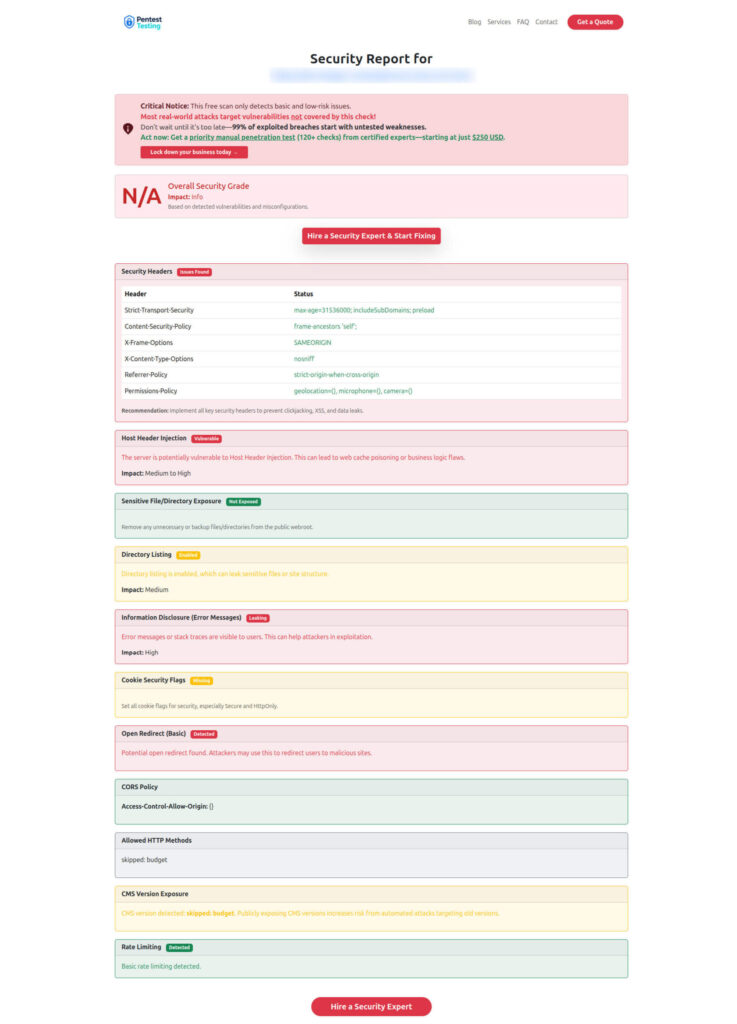

Free Website Vulnerability Scanner by (Pentest Testing Corp)

Sample report to check Website Vulnerability (from the tool)

Related reading on Cyber Rely (recent posts)

- 7 Powerful Forensics-Ready Telemetry Patterns

- 7 Proven Patterns for Forensics-Ready Microservices

- 7 Proven Forensics-Ready CI/CD Pipeline Steps

- 9 Battle-Tested Non-Human Identity Security Controls

- 7 Powerful Kev-To-Deploy Steps in 24–72h

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about Zero Trust Egress Controls for Microservices.