7 Proven Patterns for Forensics-Ready Microservices

If you’ve ever tried to investigate an incident in a microservices stack, you already know the pain: logs scattered across services, missing request IDs, inconsistent event formats, and “helpful” debug lines that omit the one thing you need—who did what, when, from where, and what changed.

Forensics-ready microservices flip that story. They’re designed so your engineering and security teams can reconstruct timelines fast, confidently scope impact, and answer leadership questions with evidence—not guesswork.

This guide is implementation-heavy. You’ll leave with copy/paste patterns for:

- structured + correlated logging

- request tracing and context propagation

- immutable audit trails (append-only + tamper resistance)

- CI/CD provenance + artifact capture

- hardening choices that make investigations efficient instead of reactive

Want more engineering-first security like this? Browse the latest posts on the Cyber Rely blog: https://www.cybersrely.com/blog/

What “forensics-ready microservices” actually means

Forensics-ready microservices produce investigation-friendly telemetry by default:

- Consistent, structured logs (not freestyle strings)

- Correlation everywhere (request_id + trace_id across hops)

- High-value audit events (auth, access, export, admin actions, data changes)

- Immutability / trust (append-only + retention + tamper resistance)

- Provenance (what code/build ran, who shipped it, what artifacts existed)

If your system can’t answer these quickly, it’s not forensics-ready:

- Which user/session/API key triggered the first suspicious action?

- Which services were touched downstream (and in what order)?

- What resources were accessed/changed (before/after)?

- What build version was deployed at that time?

- What evidence do we have that logs weren’t altered?

Pattern 1) Adopt a canonical security event schema (don’t freestyle)

The fastest way to lose days in incident response is to let every service log a different “shape.”

Start with a canonical audit/security event and version it. Your goal: any engineer can grep/query across services and get consistent fields.

Canonical audit event (JSON)

```json
{
  "schema": "audit.v1",
  "ts": "2026-02-03T10:15:23.412Z",
  "service": "orders-api",
  "env": "prod",
  "event": "order.export",
  "severity": "info",
  "result": "success",
  "actor": {
    "type": "user",
    "id": "u_12345",
    "role": "admin",
    "ip": "203.0.113.10",
    "ua": "Mozilla/5.0",
    "mfa": true
  },
  "target": {
    "type": "order",
    "id": "ord_8891",
    "tenant_id": "t_77"
  },
  "request": {
    "request_id": "req_01HR9K9YQ1K8PZ1WJ1Q8N2V7A1",
    "trace_id": "4bf92f3577b34da6a3ce929d0e0e4736",
    "span_id": "00f067aa0ba902b7",
    "method": "GET",
    "path": "/v1/orders/export",
    "status": 200
  },
  "change": {
    "fields": ["exported_at"],
    "before": null,
    "after": "2026-02-03T10:15:23.000Z"
  },
  "labels": {
    "region": "ap-south-1",
    "build_sha": "9c1a2f1",
    "release": "[email protected]"
  }
}
```

Minimal JSON Schema (versioned)

```json
{
  "$id": "audit.v1.schema.json",
  "type": "object",
  "required": ["schema", "ts", "service", "event", "result", "request", "actor"],
  "properties": {
    "schema": { "const": "audit.v1" },
    "ts": { "type": "string" },
    "service": { "type": "string" },
    "event": { "type": "string" },
    "result": { "enum": ["success", "fail", "deny"] },
    "request": {
      "type": "object",
      "required": ["request_id"],
      "properties": {
        "request_id": { "type": "string" },
        "trace_id": { "type": "string" }
      }
    }
  }
}
```

Tip: Treat this schema like an API contract. If a service can’t emit it, the service isn’t “incident-ready.”
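If you would rather not pull a JSON Schema library into every service, a stdlib-only check can enforce the same contract at emit time. This is a minimal sketch that mirrors the `audit.v1` schema: `validate_audit_event` is a hypothetical helper, and it checks only required fields, the `const`, the string types, and the `result` enum, not full JSON Schema semantics.

```python
from __future__ import annotations

from typing import Any

# Mirrors the "required" list and constraints of audit.v1 (hypothetical helper, not a JSON Schema engine)
REQUIRED = ("schema", "ts", "service", "event", "result", "request", "actor")
STRING_FIELDS = ("ts", "service", "event")
ALLOWED_RESULTS = {"success", "fail", "deny"}


def validate_audit_event(event: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the event passes."""
    problems = [f"missing field: {name}" for name in REQUIRED if name not in event]
    if event.get("schema", "audit.v1") != "audit.v1":
        problems.append("schema must be 'audit.v1'")
    for name in STRING_FIELDS:
        if name in event and not isinstance(event[name], str):
            problems.append(f"{name} must be a string")
    if "result" in event and event["result"] not in ALLOWED_RESULTS:
        problems.append(f"result must be one of {sorted(ALLOWED_RESULTS)}")
    request = event.get("request")
    if isinstance(request, dict):
        if not isinstance(request.get("request_id"), str):
            problems.append("request.request_id must be a string")
    elif "request" in event:
        problems.append("request must be an object")
    return problems


if __name__ == "__main__":
    good = {
        "schema": "audit.v1", "ts": "2026-02-03T10:15:23.412Z", "service": "orders-api",
        "event": "order.export", "result": "success",
        "request": {"request_id": "req_01HR9K9YQ1K8PZ1WJ1Q8N2V7A1"}, "actor": {"type": "user"},
    }
    print(validate_audit_event(good))  # []
    print(validate_audit_event({"schema": "audit.v1", "result": "ok"}))
```

Returning a list of problems instead of raising lets a service decide per environment whether to drop, fix, or alert on a malformed event.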

Pattern 2) Make request correlation non-negotiable (request_id everywhere)

Forensics-ready microservices require a single correlation handle that survives every hop.
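In a Python service, one way to make that handle survive every call (including async code) without adding it to every function signature is `contextvars` plus a `logging.Filter`. This is a sketch, not a prescribed implementation: `bind_request_id`, `RequestIdFilter`, `JsonFormatter`, and the accepted ID pattern are hypothetical choices, and your web framework's middleware would call `bind_request_id` with the incoming `x-request-id` header.

```python
from __future__ import annotations

import contextvars
import json
import logging
import re
import uuid

# Current request's ID; each thread / asyncio task context sees its own value
request_id_var: contextvars.ContextVar[str] = contextvars.ContextVar("request_id", default="-")
SAFE_ID = re.compile(r"[A-Za-z0-9._-]{1,128}")  # assumed policy for caller-supplied IDs


def bind_request_id(incoming: str | None) -> str:
    """Reuse a well-formed caller ID, otherwise mint one (hypothetical helper).

    Real middleware should also reset the variable when the request ends.
    """
    rid = incoming if incoming and SAFE_ID.fullmatch(incoming) else f"req_{uuid.uuid4()}"
    request_id_var.set(rid)
    return rid


class RequestIdFilter(logging.Filter):
    """Stamps every log record with the request_id from the current context."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


class JsonFormatter(logging.Formatter):
    """One JSON object per log line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "ts": self.formatTime(record),
            "level": record.levelname,
            "msg": record.getMessage(),
            "request_id": getattr(record, "request_id", "-"),
        })


handler = logging.StreamHandler()
handler.addFilter(RequestIdFilter())
handler.setFormatter(JsonFormatter())
log = logging.getLogger("orders-api")
log.addHandler(handler)
log.setLevel(logging.INFO)

if __name__ == "__main__":
    bind_request_id("req_01HR9K9YQ1K8PZ1WJ1Q8N2V7A1")
    log.info("order export started")  # the emitted JSON line carries the same request_id
```

Because the filter reads the context variable at log time, helpers deep in the call stack get the request_id on every line without being changed.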

Node.js (Express) middleware: request_id + structured logs

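```javascript
import crypto from "crypto";

// Accept a caller-supplied ID only if it looks sane, so clients can't
// inject newlines or oversized strings into your logs.
const REQUEST_ID_RE = /^[A-Za-z0-9._-]{1,128}$/;

export function correlation(req, res, next) {
  const incoming = req.header("x-request-id");
  const requestId =
    incoming && REQUEST_ID_RE.test(incoming) ? incoming : `req_${crypto.randomUUID()}`;

  req.requestId = requestId;
  res.setHeader("x-request-id", requestId);

  // Optional: attach actor hints if available (never trust blindly).
  // Behind a load balancer, req.ip is the real client IP only if Express "trust proxy" is configured.
  req.actor = {
    ip: req.ip,
    ua: req.get("user-agent"),
  };

  next();
}
```

Structured logger helper (JSON)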

```javascript
export function auditLog(event) {
  // Output JSON only (easy to parse, ship, and query)
  process.stdout.write(JSON.stringify(event) + "\n");
}
```

Usage in an endpoint

```javascript
app.get("/v1/orders/export", async (req, res) => {
  // ... authz checks ...

  auditLog({
    schema: "audit.v1",
    ts: new Date().toISOString(),
    service: "orders-api",
    env: process.env.NODE_ENV,
    event: "order.export",
    result: "success",
    actor: {
      type: "user",
      id: req.user.id,
      role: req.user.role,
      ip: req.actor.ip,
      ua: req.actor.ua,
      mfa: !!req.user.mfa,
    },
    target: { type: "order", id: "bulk", tenant_id: req.user.tenantId },
    request: { request_id: req.requestId, method: req.method, path: req.path, status: 200 },
    labels: { build_sha: process.env.BUILD_SHA, release: process.env.RELEASE },
  });

  res.json({ ok: true, request_id: req.requestId });
});
```

Pattern 3) Propagate trace context (so you can follow the blast radius)

Correlation is good. Distributed tracing is better—because it shows dependency chains.

HTTP: propagate traceparent and x-request-id

```javascript
// node-fetch is only needed on Node versions without a global fetch (before 18)
import fetch from "node-fetch";

export async function callBilling(req, payload) {
  const headers = {
    "content-type": "application/json",
    "x-request-id": req.requestId,
  };

  // Forward W3C trace context if the caller sent one
  const tp = req.header("traceparent");
  if (tp) headers["traceparent"] = tp;

  return fetch(process.env.BILLING_URL + "/v1/charge", {
    method: "POST",
    headers,
    body: JSON.stringify(payload),
  });
}
```

gRPC (example): pass correlation via metadata

```javascript
import { Metadata } from "@grpc/grpc-js";

export function grpcMeta(req) {
  const md = new Metadata();
  md.set("x-request-id", req.requestId);

  const tp = req.header("traceparent");
  if (tp) md.set("traceparent", tp);

  return md;
}
```

Add security tags to spans (investigation-friendly traces)

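```javascript
import { trace } from "@opentelemetry/api";

// Inside a handler, after authn. enduser.id is an OpenTelemetry attribute name; the other keys are custom.
const span = trace.getActiveSpan(); // undefined when no span is active
if (span) {
  span.setAttribute("enduser.id", req.user.id);
  span.setAttribute("tenant.id", req.user.tenantId);
  span.setAttribute("auth.method", req.user.authMethod);
  span.setAttribute("security.event", "order.export");
}
```

Why it matters: during an incident, you want to see “request entered gateway → orders-api → billing → storage” in seconds.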
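Forwarding the incoming `traceparent` unchanged keeps the trace_id intact. But if a service records its own span, downstream spans should name that span as their parent; forwarding the header as-is makes them point at the caller's span instead. Tracing SDKs such as OpenTelemetry handle this for you. The sketch below shows the underlying W3C Trace Context mechanics by hand, with hypothetical helper names, so it is clear what a propagated header carries; it handles only version `00`.

```python
from __future__ import annotations

import re
import secrets
from typing import NamedTuple

# W3C Trace Context, version 00 only: "00-<32 hex trace-id>-<16 hex parent-id>-<2 hex flags>"
TRACEPARENT_RE = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class TraceContext(NamedTuple):
    trace_id: str
    parent_id: str
    flags: str


def parse_traceparent(header: str | None) -> TraceContext | None:
    """Return the parsed context, or None if the header is missing or malformed."""
    if not header:
        return None
    m = TRACEPARENT_RE.fullmatch(header.strip())
    if not m:
        return None
    trace_id, parent_id, flags = m.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:  # all-zero IDs are invalid per the spec
        return None
    return TraceContext(trace_id, parent_id, flags)


def child_traceparent(incoming: str | None) -> str:
    """Header for the next hop: keep the trace_id, mint a span ID for this service's span."""
    ctx = parse_traceparent(incoming)
    trace_id = ctx.trace_id if ctx else secrets.token_hex(16)  # start a new trace if none came in
    flags = ctx.flags if ctx else "01"  # "01" = sampled; an assumed default for new traces
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


if __name__ == "__main__":
    incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
    print(child_traceparent(incoming))  # same trace_id, new 16-hex span ID
```

If each service logs its own span ID next to the shared trace_id, the chain of parent IDs reconstructs the order of hops during an investigation.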

Pattern 4) Build an append-only audit trail (logs aren’t enough)

Logs can be dropped, sampled, or delayed. For high-value actions, write audit events into an append-only datastore.
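To experiment with the append-only idea locally, Python's built-in `sqlite3` is enough. This sketch assumes SQLite as a stand-in for Postgres: SQLite triggers use `RAISE(ABORT, ...)` instead of a PL/pgSQL function, the table is trimmed to a few columns, and the `orders` columns are illustrative. It shows both halves of the pattern: mutations of audit rows are rejected, and the business change and its audit row commit or roll back together.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id TEXT PRIMARY KEY, tenant_id TEXT, exported_at TEXT);
CREATE TABLE audit_events (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    ts TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,
    event TEXT NOT NULL,
    tenant_id TEXT,
    request_id TEXT NOT NULL,
    payload TEXT NOT NULL
);
-- Append-only: reject UPDATE and DELETE on audit rows
CREATE TRIGGER audit_no_update BEFORE UPDATE ON audit_events
BEGIN SELECT RAISE(ABORT, 'audit_events is append-only'); END;
CREATE TRIGGER audit_no_delete BEFORE DELETE ON audit_events
BEGIN SELECT RAISE(ABORT, 'audit_events is append-only'); END;
INSERT INTO orders VALUES ('ord_8891', 't_77', NULL);
""")


def export_orders(tenant_id: str, request_id: str) -> None:
    """Business change + audit row in one transaction."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "UPDATE orders SET exported_at = CURRENT_TIMESTAMP WHERE tenant_id = ?",
            (tenant_id,),
        )
        conn.execute(
            "INSERT INTO audit_events (event, tenant_id, request_id, payload) VALUES (?, ?, ?, ?)",
            ("order.export", tenant_id, request_id, json.dumps({"result": "success"})),
        )


if __name__ == "__main__":
    export_orders("t_77", "req_01HR9K9YQ1K8PZ1WJ1Q8N2V7A1")
    try:
        conn.execute("DELETE FROM audit_events")
    except sqlite3.DatabaseError as exc:
        print("blocked:", exc)
```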

Postgres: audit table (append-only)

```sql
CREATE TABLE audit_events (
  id          BIGSERIAL PRIMARY KEY,
  ts          TIMESTAMPTZ NOT NULL DEFAULT now(),
  schema      TEXT NOT NULL,
  service     TEXT NOT NULL,
  event       TEXT NOT NULL,
  result      TEXT NOT NULL,
  tenant_id   TEXT,
  actor_id    TEXT,
  actor_type  TEXT,
  request_id  TEXT NOT NULL,
  trace_id    TEXT,
  payload     JSONB NOT NULL
);

-- Prevent UPDATE/DELETE (append-only)
CREATE OR REPLACE FUNCTION deny_mutations() RETURNS trigger AS $$
BEGIN
  RAISE EXCEPTION 'audit_events is append-only';
END;
$$ LANGUAGE plpgsql;

CREATE TRIGGER audit_events_no_update
  BEFORE UPDATE OR DELETE ON audit_events
  FOR EACH ROW EXECUTE FUNCTION deny_mutations();

-- Row-level triggers don't fire on TRUNCATE, so block it with a statement-level trigger
CREATE TRIGGER audit_events_no_truncate
  BEFORE TRUNCATE ON audit_events
  FOR EACH STATEMENT EXECUTE FUNCTION deny_mutations();

-- The table owner or a superuser can still drop these triggers: keep ownership away from app roles
```

Transactionally write audit events (so evidence matches reality)

```sql
-- Run from your driver in one transaction.
-- $1..$5 are bind parameters: tenant_id, actor_id, request_id, trace_id, payload
BEGIN;

-- business change
UPDATE orders SET exported_at = now() WHERE tenant_id = $1;

-- audit event (same transaction)
INSERT INTO audit_events (schema, service, event, result, tenant_id, actor_id, actor_type, request_id, trace_id, payload)
VALUES (
  'audit.v1', 'orders-api', 'order.export', 'success', $1, $2, 'user', $3, $4, $5::jsonb
);

COMMIT;
```

Pattern 5) Add tamper resistance (so logs can be trusted)

“Immutable” in practice means two things: changes are hard to make, and any change that does happen is easy to detect.

Hash chaining audit events (simple, effective)

```python
import hashlib
import json

def chain_hash(prev_hash: str, event: dict) -> str:
    body = json.dumps(event, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(prev_hash.encode() + body).hexdigest()

# Store prev_hash + this_hash with each event (or per time window)
```

Object-lock retention for log archives (Terraform example)

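```hcl
resource "aws_s3_bucket" "audit_archive" {
  bucket = "prod-audit-archive"

  # Object Lock must be enabled on the bucket itself; S3 then enables versioning automatically
  object_lock_enabled = true
}

resource "aws_s3_bucket_object_lock_configuration" "lock" {
  bucket = aws_s3_bucket.audit_archive.id

  rule {
    default_retention {
      mode = "COMPLIANCE"
      days = 90
    }
  }
}
```

In COMPLIANCE mode, no user, including the AWS account root user, can overwrite or delete a locked object version until its retention period expires. Even if you don’t use this exact stack, the principle holds: make evidence durable.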
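Hash chaining only pays off if someone verifies the chain. A minimal sketch, assuming each stored record keeps `prev_hash` and `hash` next to its event and that the chain starts from a fixed genesis value (the record layout and `GENESIS` are hypothetical): recompute every link and report the first record that doesn't match.

```python
from __future__ import annotations

import hashlib
import json

GENESIS = "0" * 64  # hypothetical fixed starting value for the first link


def chain_hash(prev_hash: str, event: dict) -> str:
    # Same construction as chain_hash above: canonical JSON prefixed with the previous hash
    body = json.dumps(event, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(prev_hash.encode() + body).hexdigest()


def append(chain: list[dict], event: dict) -> None:
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev_hash": prev, "hash": chain_hash(prev, event)})


def first_broken_link(chain: list[dict]) -> int | None:
    """Index of the first record whose hash or back-pointer doesn't verify, or None if intact."""
    prev = GENESIS
    for i, record in enumerate(chain):
        if record["prev_hash"] != prev or record["hash"] != chain_hash(prev, record["event"]):
            return i
        prev = record["hash"]
    return None


if __name__ == "__main__":
    chain: list[dict] = []
    for n in range(3):
        append(chain, {"event": "order.export", "seq": n})
    print(first_broken_link(chain))  # None
    chain[1]["event"]["seq"] = 99    # tamper with the middle record
    print(first_broken_link(chain))  # 1
```

The chain alone cannot detect deletion of the newest records, which is why periodically copying the latest hash somewhere durable (such as an object-locked archive) strengthens it.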

Pattern 6) CI/CD provenance: capture “what shipped” as evidence

Forensics-ready microservices don’t stop at runtime telemetry. Investigations also need build identity:

- commit SHA

- build number

- SBOM / dependency snapshot

- signed artifacts (optional but powerful)

- deploy metadata (what cluster/namespace, when, by which pipeline)
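One way to make the evidence bundle itself verifiable is to record a SHA-256 digest for every file in it, alongside the build identity. A sketch assuming the bundle is a local `evidence/` directory, like the one the workflow in this pattern produces, and that `BUILD_SHA` and `RELEASE` are set as environment variables; `write_manifest` and the `manifest.json` file name are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
import os
from pathlib import Path

MANIFEST_NAME = "manifest.json"  # hypothetical file name for the digest list


def sha256_file(path: Path) -> str:
    """Stream the file so large artifacts don't have to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(bundle_dir: str) -> dict:
    """Hash every file in the bundle and record build identity next to the digests."""
    root = Path(bundle_dir)
    files = {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file() and p.name != MANIFEST_NAME
    }
    manifest = {
        "build_sha": os.environ.get("BUILD_SHA", "unknown"),
        "release": os.environ.get("RELEASE", "unknown"),
        "files": files,
    }
    (root / MANIFEST_NAME).write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest


if __name__ == "__main__":
    if Path("evidence").is_dir():
        print(json.dumps(write_manifest("evidence"), indent=2))
```

In a CI pipeline this could run as one extra step just before the artifact upload, so the digests travel with the files they describe.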

GitHub Actions: retain evidence bundle

```yaml
name: build-and-evidence

on: [push]

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Set build metadata
        run: |
          echo "BUILD_SHA=$(git rev-parse --short HEAD)" >> $GITHUB_ENV
          echo "RELEASE=orders@$(date +%Y.%m.%d)-${GITHUB_RUN_NUMBER}" >> $GITHUB_ENV

      - name: Build
        run: |
          docker build -t orders:${{ env.BUILD_SHA }} .

      - name: Generate SBOM (example)
        run: |
          mkdir -p evidence
          echo "{\"release\":\"${{ env.RELEASE }}\",\"sha\":\"${{ env.BUILD_SHA }}\"}" > evidence/build.json
          # Replace with your SBOM tool of choice
          echo "sbom-placeholder" > evidence/sbom.txt

      - name: Upload evidence bundle
        uses: actions/upload-artifact@v4
        with:
          name: evidence-${{ env.RELEASE }}
          path: evidence/
          retention-days: 90
```

Deploy manifest: stamp build identity (so telemetry can link to “what ran”)

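```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
spec:
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      app: orders-api
  template:
    metadata:
      labels:
        app: orders-api
        build_sha: "9c1a2f1"
      annotations:
        # Label values can't contain "@", so the release string goes in an annotation
        release: "[email protected]"
    spec:
      containers:
        - name: orders-api
          image: orders:9c1a2f1
          env:
            - name: RELEASE
              value: "[email protected]"
            - name: BUILD_SHA
              value: "9c1a2f1"
```

Pattern 7) Hardening that makes investigations faster (not louder)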

Some “security best practices” help prevention, but not investigation. Forensics-ready microservices prioritize both.

Practical hardening moves that help DFIR

- Service identity (mTLS or signed service tokens) so you can attribute service-to-service calls.

- Least privilege per service so you can scope blast radius quickly.

- Secrets hygiene + rotation evidence so you can prove what changed after containment.

- High-value event coverage (exports, admin actions, role changes, auth failures, token refresh, key creation).

- Redaction by default so you don’t turn logging off during the next incident.

Python redaction example (safe logging)

```python
SENSITIVE_KEYS = {"password", "token", "secret", "authorization", "api_key"}

def redact(obj):
    # Recursively mask values whose key name is sensitive (case-insensitive match)
    if isinstance(obj, dict):
        return {
            k: ("[REDACTED]" if str(k).lower() in SENSITIVE_KEYS else redact(v))
            for k, v in obj.items()
        }
    if isinstance(obj, list):
        return [redact(x) for x in obj]
    return obj
```

A “real-world” blueprint: telemetry pipeline for forensics-ready microservices

Use a simple mental model:

App logs (structured JSON) + audit events (append-only) + traces (context propagation) + CI/CD evidence (provenance)

→ shipped into a central place with retention + access controls.

Fluent Bit example: ship JSON logs reliably

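```ini
# multiline.parser unwraps container runtime log lines (Docker JSON or CRI format)
[INPUT]
    Name              tail
    Path              /var/log/containers/*.log
    multiline.parser  docker, cri
    Tag               kube.*

# Merge_Log parses the application's own JSON log line into structured fields
[FILTER]
    Name       kubernetes
    Match      kube.*
    Merge_Log  On

[OUTPUT]
    Name    http
    Match   *
    Host    log-collector.internal
    Port    8080
    URI     /ingest
    Format  json
```

Investigation queries you’ll actually use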
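When jq one-liners start turning into a spreadsheet, a short script can rebuild the cross-service timeline for one request. A sketch assuming every line of the export follows the `audit.v1` shape and that `ts` values are ISO-8601 UTC strings in one consistent format, so they sort correctly as text; `timeline` and the `timeline.py` file name are hypothetical, and unparseable lines are skipped rather than failing the triage.

```python
from __future__ import annotations

import json
import sys
from typing import Iterable


def timeline(lines: Iterable[str], request_id: str) -> list[str]:
    """Return 'ts service event result' rows for one request_id, oldest first."""
    events = []
    for line in lines:
        try:
            ev = json.loads(line)
        except json.JSONDecodeError:
            continue  # truncated or non-JSON lines are common in raw exports
        req = ev.get("request") if isinstance(ev, dict) else None
        if isinstance(req, dict) and req.get("request_id") == request_id:
            events.append(ev)
    # ISO-8601 UTC timestamps in one format sort correctly as strings
    events.sort(key=lambda ev: str(ev.get("ts", "")))
    return [f'{ev.get("ts")} {ev.get("service")} {ev.get("event")} {ev.get("result")}' for ev in events]


if __name__ == "__main__":
    # usage: python timeline.py logs.jsonl req_01HR9K9YQ1K8PZ1WJ1Q8N2V7A1
    if len(sys.argv) == 3:
        with open(sys.argv[1], encoding="utf-8") as f:
            for row in timeline(f, sys.argv[2]):
                print(row)
```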

Follow one request across services (by request_id)

```bash
# Example: local export for triage
jq -c 'select(.request.request_id=="req_01HR9K9YQ1K8PZ1WJ1Q8N2V7A1")' logs.jsonl
```

Filter for high-value events only

```bash
jq -c 'select(.schema=="audit.v1" and (.event|test("export|admin|role|token|login")))' logs.jsonl
```

Where the free scanner fits (fast signal before deeper work)

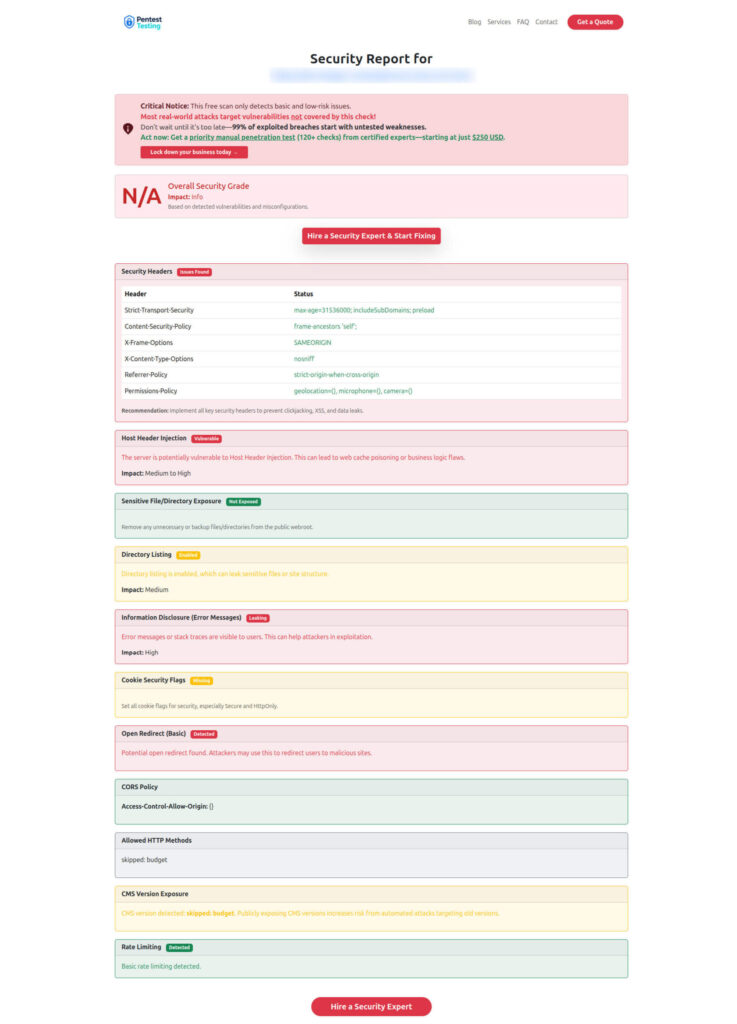

If you want a quick, practical starting point for risk discovery (especially for web apps and APIs), use our free tool:

Website Vulnerability Scanner: https://free.pentesttesting.com/

[Screenshot: Free Website Vulnerability Scanner dashboard]

[Screenshot: sample Website Vulnerability report]

Need help making your stack forensics-ready?

If you want an expert-led path—from risk discovery to remediation and incident-grade evidence—these services are a strong next step:

- Risk Assessment Services: https://www.pentesttesting.com/risk-assessment-services/

- Remediation Services: https://www.pentesttesting.com/remediation-services/

- Digital Forensic Analysis Services: https://www.pentesttesting.com/digital-forensic-analysis-services/

Related Cyber Rely reading (implementation-heavy)

If you’re building forensics-ready microservices, these companion guides go deeper on the building blocks:

- Forensics-Ready APIs for Microservices: https://www.cybersrely.com/forensics-ready-apis-microservices-observability/

- Forensics-Ready SaaS Logging Patterns: https://www.cybersrely.com/forensics-ready-saas-logging-audit-trails/

- Forensics-Ready CI/CD Pipeline Steps: https://www.cybersrely.com/forensics-ready-ci-cd-pipeline-steps/

- Supply-Chain CI Hardening Wins (2026): https://www.cybersrely.com/supply-chain-ci-hardening-2026/

- Security Chaos Experiments for CI/CD: https://www.cybersrely.com/security-chaos-experiments-for-ci-cd/

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about Forensics-Ready Microservices.