9 Powerful Forensics-Ready APIs for Microservices

If you’ve ever tried to investigate a production incident across microservices, you know the pain: logs split across services, missing request IDs, inconsistent schemas, and “helpful” debug statements that omit the one thing you need—who did what, when, from where, and how it spread.

That’s why forensics-ready APIs are becoming a real engineering requirement—not a compliance checkbox.

Forensics-ready APIs and forensics-ready microservices are designed to make investigations fast, reliable, and low-drama:

- You can rebuild a timeline without guesswork

- You can prove impact boundaries (what was accessed/changed)

- You can correlate identity, session, and service-to-service hops

- You can ship features without turning observability into a bottleneck

This guide gives practical patterns + copy/paste-heavy code to help engineering leaders build forensics-ready APIs with microservices observability that scales.

1) Start With a Canonical Audit Event (Schema v1)

Most teams lose time during incidents because every service logs different shapes. Fix that by defining a canonical audit event schema for forensics-ready APIs and enforcing it.

Canonical event example (JSON)

{

"schema_version": "1.0",

"ts": "2026-01-27T10:15:00.123Z",

"env": "prod",

"service": "orders-api",

"event": "order.updated",

"severity": "info",

"request_id": "req_01HT9P9K4V...",

"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736",

"span_id": "00f067aa0ba902b7",

"actor": { "type": "user", "id": "user_123", "org_id": "org_77", "role": "admin" },

"auth": { "method": "passkey", "mfa": true, "session_id": "sess_abc" },

"source": { "ip": "203.0.113.10", "user_agent": "Mozilla/5.0", "device_id": "dev_xyz" },

"target": { "resource_type": "order", "resource_id": "ord_9001" },

"change": { "fields": ["status"], "before": { "status": "pending" }, "after": { "status": "shipped" } },

"result": "success",

"latency_ms": 27

}

Minimal JSON Schema snippet (versioned)

{

"$id": "audit-event.schema.v1.json",

"type": "object",

"required": ["schema_version","ts","env","service","event","result"],

"properties": {

"schema_version": { "type": "string" },

"ts": { "type": "string" },

"env": { "type": "string" },

"service": { "type": "string" },

"event": { "type": "string" },

"request_id": { "type": "string" },

"trace_id": { "type": "string" },

"actor": { "type": "object" },

"source": { "type": "object" },

"target": { "type": "object" },

"result": { "type": "string", "enum": ["success","fail"] }

}

}

Rule: every service that emits security-relevant events uses the same schema, and schema_version is incremented whenever a breaking change is made.
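One way to enforce the rule is to check events before they leave the service. The sketch below is a dependency-free check built from the required fields in the schema snippet; the name `validateAuditEvent` is hypothetical, and in practice you would more likely run a full JSON Schema validator against the versioned schema file.

```javascript
// Required fields and allowed results, copied from the schema snippet above.
const REQUIRED_FIELDS = ["schema_version", "ts", "env", "service", "event", "result"];
const ALLOWED_RESULTS = ["success", "fail"];

// Hypothetical helper: returns a list of problems (empty when the event passes).
function validateAuditEvent(evt) {
  if (evt === null || typeof evt !== "object" || Array.isArray(evt)) {
    return ["event must be an object"];
  }
  const errors = [];
  for (const field of REQUIRED_FIELDS) {
    // Stricter than the schema: empty strings are rejected too (an assumption).
    if (typeof evt[field] !== "string" || evt[field] === "") {
      errors.push(`missing or non-string field: ${field}`);
    }
  }
  if (typeof evt.result === "string" && evt.result !== "" && !ALLOWED_RESULTS.includes(evt.result)) {
    errors.push(`result must be one of: ${ALLOWED_RESULTS.join(", ")}`);
  }
  // The schema only types ts as a string; requiring a parseable date is an extra assumption.
  if (typeof evt.ts === "string" && evt.ts !== "" && Number.isNaN(Date.parse(evt.ts))) {
    errors.push("ts is not a parseable timestamp");
  }
  return errors;
}
```

A service could call this right before emitting and log (or count) any non-empty result, so schema drift shows up as a metric rather than during an incident.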

2) Make Request Correlation Non-Negotiable (Request ID + Trace Context)

Without correlation, incident timelines collapse. For forensics-ready APIs, you need:

- x-request-id propagation edge → edge

- traceparent propagation service → service

- consistent log fields so queries work across all services

Node.js (Express) middleware: request_id + actor + structured logs

import crypto from "crypto";

import pino from "pino";

import pinoHttp from "pino-http";

const logger = pino({

level: process.env.LOG_LEVEL || "info",

redact: {

paths: [

"req.headers.authorization",

"req.headers.cookie",

"res.headers['set-cookie']",

"*.token",

"*.password",

"*.secret"

],

remove: true

}

});

function getRequestId(req) {

return req.headers["x-request-id"] || `req_${crypto.randomUUID()}`;

}

function getActor(req) {

// set req.user from your auth middleware after verifying token/session

const u = req.user;

if (!u) return { type: "anonymous" };

return { type: "user", id: u.id, org_id: u.orgId, role: u.role };

}

export const httpLogger = pinoHttp({

logger,

genReqId: (req) => getRequestId(req),

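customProps: (req) => ({

// expose the correlation ID as a top-level field, matching the canonical schema

request_id: req.id,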

actor: getActor(req),

source: {

ip: (req.headers["x-forwarded-for"] || "").split(",")[0].trim() || req.socket.remoteAddress,

user_agent: req.headers["user-agent"],

device_id: req.headers["x-device-id"]

},

trace: {

traceparent: req.headers["traceparent"]

}

}),

customSuccessMessage: () => "request.completed",

customErrorMessage: () => "request.failed"

});

Usage:

import express from "express";

import { httpLogger } from "./httpLogger.js";

const app = express();

app.use(httpLogger);

app.get("/v1/orders/:id", async (req, res) => {

req.log.info({ event: "order.read", target: { resource_type: "order", resource_id: req.params.id } });

res.json({ ok: true });

});

app.listen(3000);

Now your forensics-ready APIs emit consistent identity + source + correlation fields on every request.
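The middleware records the raw traceparent header, while the canonical event uses separate trace_id and span_id fields. A minimal sketch of bridging the two, assuming the W3C Trace Context version-00 layout; `parseTraceparent` and `correlationHeaders` are hypothetical helper names, not part of pino-http or Express.

```javascript
// W3C Trace Context (version 00): version-traceid-parentid-flags, lowercase hex.
const TRACEPARENT_RE = /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/;

// Hypothetical helper: returns { trace_id, span_id, sampled },
// or null when the header is absent or malformed.
function parseTraceparent(header) {
  if (typeof header !== "string") return null;
  const m = TRACEPARENT_RE.exec(header.trim());
  if (!m) return null;
  const [, version, traceId, parentId, flags] = m;
  // The spec treats version "ff" and all-zero IDs as invalid.
  if (version === "ff" || /^0+$/.test(traceId) || /^0+$/.test(parentId)) return null;
  return {
    trace_id: traceId,
    span_id: parentId,
    sampled: (parseInt(flags, 16) & 1) === 1
  };
}

// Hypothetical helper: headers to copy onto outbound service-to-service calls.
// A tracing SDK would normally mint a fresh parent ID per hop; this sketch only
// keeps the request ID and trace ID flowing.
function correlationHeaders(req) {
  const headers = { "x-request-id": req.id };
  if (req.headers["traceparent"]) headers["traceparent"] = req.headers["traceparent"];
  return headers;
}
```

The parsed fields could be spread into customProps so every log line carries trace_id and span_id, and `correlationHeaders(req)` could be merged into the headers of any downstream fetch call.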

3) Tag Spans Like a Security Team (Tracing That Helps Investigations)

Distributed tracing becomes “security observability” when you add investigation-grade tags:

- actor type/id/org

- auth method + session id

- target resource type/id

- result (success/fail) + reason codes

Node.js: attach security attributes to spans (pattern)

// Pseudocode pattern: apply attributes wherever your tracing hooks live

function applySecuritySpanAttrs(span, { actor, target, auth, requestId }) {

if (!span) return;

span.setAttribute("sec.request_id", requestId || "");

span.setAttribute("sec.actor.type", actor?.type || "unknown");

span.setAttribute("sec.actor.id", actor?.id || "");

span.setAttribute("sec.actor.org_id", actor?.org_id || "");

span.setAttribute("sec.auth.method", auth?.method || "");

span.setAttribute("sec.auth.session_id", auth?.session_id || "");

span.setAttribute("sec.target.type", target?.resource_type || "");

span.setAttribute("sec.target.id", target?.resource_id || "");

}

Why this matters: in a real breach investigation, the question is rarely “why was this trace slow?” It is “which identity touched which object across which services?”

4) Build an Immutable Audit Trail (Append-Only by Design)

Debug logs are for developers. Audit trails are for incident response, customer trust, and compliance evidence. For forensics-ready APIs, store a dedicated audit stream that’s:

- append-only

- queryable

- tamper-evident (at least)

Postgres: practical append-only audit table

CREATE TABLE audit_events (

id TEXT PRIMARY KEY,

ts TIMESTAMPTZ NOT NULL DEFAULT now(),

env TEXT NOT NULL,

service TEXT NOT NULL,

schema_version TEXT NOT NULL,

event TEXT NOT NULL,

request_id TEXT,

trace_id TEXT,

actor_type TEXT NOT NULL,

actor_id TEXT,

org_id TEXT,

source_ip TEXT,

user_agent TEXT,

device_id TEXT,

resource_type TEXT,

resource_id TEXT,

result TEXT NOT NULL,

metadata JSONB NOT NULL DEFAULT '{}'::jsonb,

-- tamper-evident chain fields

prev_hash TEXT,

row_hash TEXT

);

-- Recommendation: application role should not have UPDATE/DELETE privileges.

Simple tamper-evident hash chaining (application-side)

import crypto from "crypto";

function sha256(s) {

return crypto.createHash("sha256").update(s).digest("hex");

}

export function computeRowHash(row, prevHash) {

// Keep this stable (same field order forever)

const payload = JSON.stringify({

id: row.id,

ts: row.ts,

env: row.env,

service: row.service,

schema_version: row.schema_version,

event: row.event,

request_id: row.request_id,

trace_id: row.trace_id,

actor_type: row.actor_type,

actor_id: row.actor_id,

org_id: row.org_id,

source_ip: row.source_ip,

resource_type: row.resource_type,

resource_id: row.resource_id,

result: row.result,

metadata: row.metadata

});

return sha256(`${prevHash || ""}.${payload}`);

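}

Outcome: if someone alters a historical audit row, its recomputed hash no longer matches the stored row_hash, and every later row's prev_hash link stops lining up, so the chain visibly breaks when it is verified.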
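Detecting a break means re-walking the chain. The following is a sketch of one possible verifier, not a prescribed implementation: `findChainBreak` is a hypothetical name, it assumes rows arrive in insertion order, and it takes the hashing function as a parameter so that computeRowHash can be passed in unchanged.

```javascript
// Walks rows in insertion order and returns the index of the first row whose
// stored hashes do not match a recomputation, or -1 when the chain is intact.
// `hashRow` is expected to behave like computeRowHash(row, prevHash).
function findChainBreak(rows, hashRow) {
  let prevHash = null;
  for (let i = 0; i < rows.length; i++) {
    const row = rows[i];
    // The row must point at the hash of the row before it...
    if ((row.prev_hash || null) !== prevHash) return i;
    // ...and its own stored hash must match a fresh recomputation.
    if (row.row_hash !== hashRow(row, prevHash)) return i;
    prevHash = row.row_hash;
  }
  return -1;
}
```

A scheduled job could call `findChainBreak(rows, computeRowHash)` and alert on any result other than -1. The recomputation only matches if every hashed field round-trips identically through the database (timestamps are the usual culprit), so it is worth testing that against real rows.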

5) Treat “High-Value Events” as First-Class Signals

Attackers love events your logs often ignore. In forensics-ready APIs, explicitly log:

- login success/failure + auth changes

- MFA enrollment + recovery changes

- API key creation/rotation

- OAuth consent / token grants

- role/permission changes

- exports / bulk downloads

- webhook destination changes

- admin actions + impersonation

- data deletion + retention changes

Example: log export events with strong forensic context

req.log.info({

event: "data.export",

target: { resource_type: "report", resource_id: reportId },

metadata: {

export_format: "csv",

row_count: 18422,

filters: { date_from, date_to },

destination: "download"

},

result: "success"

}, "audit.security_event");

This makes investigations dramatically faster because your “big moments” are searchable.

6) Add a Security Telemetry Bridge (SIEM/EDR-Friendly)

A scalable approach is a small “telemetry bridge” microservice:

- consumes audit stream (DB, queue, or log pipeline)

- transforms to your SIEM schema

- signs payloads (integrity)

- forwards reliably with backoff + dead-letter

HMAC-signed forwarding (Node.js example)

import crypto from "crypto";

function sign(body, secret) {

return crypto.createHmac("sha256", secret).update(body).digest("hex");

}

export async function forwardToCollector(event, { endpoint, secret }) {

const body = JSON.stringify(event);

const sig = sign(body, secret);

const res = await fetch(endpoint, {

method: "POST",

headers: {

"content-type": "application/json",

"x-signature": sig

},

body

});

if (!res.ok) {

throw new Error(`collector_forward_failed status=${res.status}`);

}

}

Collector-side verification (Python example)

import hmac, hashlib, json

from flask import Flask, request, abort

app = Flask(__name__)

SECRET = b"replace_me"  # placeholder value

def valid_sig(raw: bytes, sig: str) -> bool:

    expected = hmac.new(SECRET, raw, hashlib.sha256).hexdigest()

    # compare as bytes: compare_digest rejects non-ASCII str input

    return hmac.compare_digest(expected.encode(), sig.encode())

@app.post("/ingest")

def ingest():

    raw = request.data

    sig = request.headers.get("x-signature", "")

    if not valid_sig(raw, sig):

        abort(401)

    event = json.loads(raw.decode("utf-8"))

    # Send to your SIEM/EDR pipeline here

    return {"ok": True}

This pattern keeps your microservices observability clean while still enabling enterprise-grade security monitoring.
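The forwarding example throws on failure but does not show the "backoff + dead-letter" step from the list above. A minimal sketch of that step, assuming `send` is any async function that throws on failure (forwardToCollector would fit) and `deadLetter` is whatever persists undeliverable events; both parameter names, `forwardWithRetry`, and the default timings are hypothetical.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical wrapper: retries `send(event)` with capped exponential backoff,
// then hands the event to `deadLetter` if every attempt fails.
async function forwardWithRetry(event, send, deadLetter, opts = {}) {
  const { maxAttempts = 5, baseDelayMs = 200, maxDelayMs = 10000 } = opts;
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      await send(event);
      return { delivered: true, attempts: attempt };
    } catch (err) {
      lastError = err;
      if (attempt === maxAttempts) break;
      // Exponential backoff with full jitter, capped at maxDelayMs.
      const ceiling = Math.min(maxDelayMs, baseDelayMs * 2 ** (attempt - 1));
      await sleep(Math.random() * ceiling);
    }
  }
  // Never drop a security event silently: park it for later replay.
  await deadLetter(event, lastError);
  return { delivered: false, attempts: maxAttempts };
}
```

Usage might look like `forwardWithRetry(event, (e) => forwardToCollector(e, { endpoint, secret }), saveToDeadLetterQueue)`, where `saveToDeadLetterQueue` is a placeholder for your own queue or table writer.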

7) CI/CD “Forensic Readiness” Gates (Tests That Prevent Regressions)

If forensic readiness isn’t tested, it decays. Add CI checks that fail builds when forensics-ready APIs stop producing the required fields.

Jest: assert logs include core fields (baseline contract)

test("audit log includes request_id + actor + target", async () => {

const res = await fetch("http://localhost:3000/v1/orders/ord_1", {

headers: { "x-request-id": "req_test_1", "x-device-id": "dev_test" }

});

expect(res.ok).toBe(true);

// Example: your test logger writes structured logs to a buffer you can inspect

const logLine = global.__LOG_BUFFER__.find(l => l.event === "order.read");

expect(logLine.request_id).toBe("req_test_1");

expect(logLine.actor).toBeDefined();

expect(logLine.target.resource_type).toBe("order");

});

Postgres integration test: audit row exists (append-only)

test("writes an append-only audit event row", async () => {

const { rows } = await db.query(

"SELECT * FROM audit_events WHERE request_id = $1 ORDER BY ts DESC LIMIT 1",

["req_test_1"]

);

expect(rows.length).toBe(1);

expect(rows[0].event).toBe("order.read");

expect(rows[0].result).toBe("success");

});

Regression detection idea (simple, effective)

Keep a “golden set” of required audit events per critical endpoint (auth, admin, export). If a PR changes behavior, your forensic contract tests catch it before production.
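A golden set can be as simple as a lookup table plus one comparison. In the sketch below, the route keys and event names other than order.read and data.export are made up for illustration, and `missingAuditEvents` is a hypothetical helper rather than part of any test framework.

```javascript
// Hypothetical golden set: route -> audit events that must appear when it is exercised.
const GOLDEN_AUDIT_EVENTS = {
  "GET /v1/orders/:id": ["order.read"],
  "POST /v1/exports": ["data.export"],
  "POST /v1/admin/roles": ["role.changed"]
};

// Returns the required events that are missing from the captured log lines.
function missingAuditEvents(route, logLines) {
  const required = GOLDEN_AUDIT_EVENTS[route] || [];
  const seen = new Set(logLines.map((line) => line.event));
  return required.filter((event) => !seen.has(event));
}
```

In a contract test, after exercising an endpoint you could assert that `missingAuditEvents(route, global.__LOG_BUFFER__)` is an empty array, so a PR that silently drops an audit event fails the build.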

8) Turn Pentest Findings Into Engineering Controls (Not Just Tickets)

Penetration tests often reveal repeat patterns: missing authz checks (IDOR), weak audit trails, overly-trusting internal calls. Convert those into:

- reusable middleware

- policy checks

- tests + gates

- mandatory audit events

Example: enforce authorization + always emit audit event (IDOR hardening)

export async function requireOrgAccess(req, res, next) {

const { orgId } = req.user || {};

const { id: orderId } = req.params;

const order = await loadOrder(orderId);

if (!order) return res.status(404).json({ error: "not_found" });

if (order.org_id !== orgId) {

req.log.warn({

event: "authz.denied",

target: { resource_type: "order", resource_id: orderId },

result: "fail",

metadata: { reason: "org_mismatch" }

}, "access_denied");

return res.status(403).json({ error: "forbidden" });

}

req.order = order;

next();

}

Now your forensics-ready APIs don’t just block abuse; they also leave a clean forensic trail of attempted abuse.

9) Keep Volume Scalable (Retention, Sampling, and “Investigation Tiers”)

Scaling microservices observability is mostly a data strategy problem:

- don’t “log everything forever”

- do store high-value audit events longer

- sample noisy debug logs but never sample security-relevant events

Practical tiering approach:

- Tier A (Audit Events): auth/admin/exports/permission changes → long retention

- Tier B (Request Logs): structured request completion logs → medium retention

- Tier C (Debug): short retention + sampling in prod

This keeps costs controlled while preserving what investigators actually need.
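The tiering rules can be expressed as a small policy function. This is a sketch under stated assumptions: the retention periods, the 10% debug sample rate, and the event-name prefixes are placeholders chosen for illustration, not values from this guide, and `tierFor` and `shouldKeep` are hypothetical names.

```javascript
// Hypothetical tier policy; retention and sample rates are placeholders.
const TIERS = {
  A: { retentionDays: 365, sampleRate: 1 },  // audit events: never sampled
  B: { retentionDays: 90, sampleRate: 1 },   // request completion logs
  C: { retentionDays: 7, sampleRate: 0.1 }   // debug logs: sampled in prod
};

// Event-name prefixes treated as security-relevant (assumed naming convention).
const AUDIT_PREFIXES = ["auth.", "authz.", "admin.", "role.", "apikey.", "data.export"];

function tierFor(record) {
  const event = record.event || "";
  if (AUDIT_PREFIXES.some((prefix) => event.startsWith(prefix))) return "A";
  // Messages set by customSuccessMessage / customErrorMessage in the request logger.
  if (record.msg === "request.completed" || record.msg === "request.failed") return "B";
  return "C";
}

// Decides whether to keep a record; `random` is injectable so the decision is testable.
function shouldKeep(record, random = Math.random) {
  return random() < TIERS[tierFor(record)].sampleRate;
}
```

Because Math.random() is always below 1, Tier A and Tier B records are always kept, which matches the rule that security-relevant events are never sampled.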

Investigation Checklist: Questions Your Forensics-Ready APIs Must Answer

During an incident, you should be able to answer these quickly:

- Which actor identities accessed the affected resource(s)?

- From which IPs/devices/sessions did access occur?

- Which endpoints and services were involved (trace)?

- What changed (before/after) and when?

- Did permissions, tokens, or OAuth grants change?

- What was exported, downloaded, or bulk-read?

- Was there lateral movement across services?

- What failed authz checks occurred (blocked attempts)?

- What was the first suspicious event in the timeline?

- What’s the blast radius by org/user/resource?

Example query: “show me everything for one request_id”

SELECT ts, service, event, actor_id, org_id, resource_type, resource_id, result, metadata

FROM audit_events

WHERE request_id = 'req_01HT9P9K4V...'

ORDER BY ts ASC;
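The blast-radius question from the checklist can be answered by summarizing the rows such a query returns. A minimal sketch, assuming rows shaped like the audit_events table; `summarizeBlastRadius` is a hypothetical helper, and in practice you might do the same grouping in SQL.

```javascript
// Hypothetical helper: `rows` are audit_events rows (for example, filtered by
// time window or actor), summarized per org, actor, and resource.
function summarizeBlastRadius(rows) {
  const orgs = new Set();
  const actors = new Set();
  const resources = new Set();
  let failures = 0;
  for (const row of rows) {
    if (row.org_id) orgs.add(row.org_id);
    if (row.actor_id) actors.add(row.actor_id);
    if (row.resource_type && row.resource_id) {
      resources.add(`${row.resource_type}:${row.resource_id}`);
    }
    if (row.result === "fail") failures++;
  }
  return {
    orgs: [...orgs].sort(),
    actors: [...actors].sort(),
    resources: [...resources].sort(),
    failed_events: failures,
    total_events: rows.length
  };
}
```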

Free Website Vulnerability Scanner (tool page)

- Free tool: https://free.pentesttesting.com/

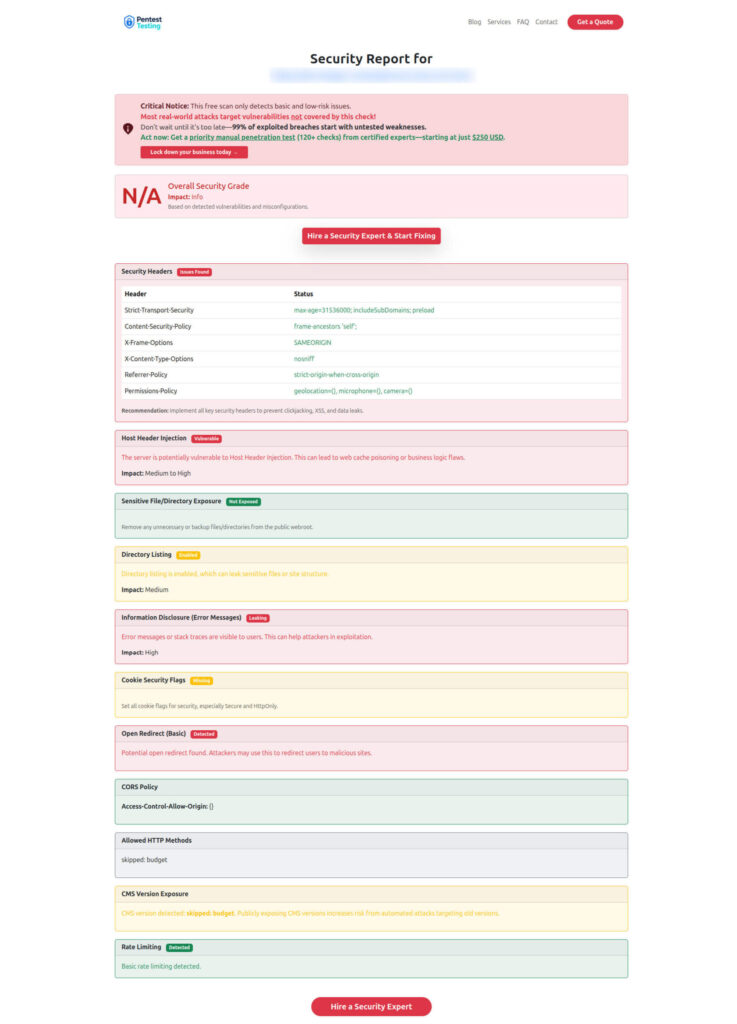

Sample Report to check Website Vulnerability (from the tool)

Need Help Implementing This in Production?

If you want hands-on help turning these patterns into shipping code and measurable security outcomes:

- Risk Assessment Services: https://www.pentesttesting.com/risk-assessment-services/

- Remediation Services: https://www.pentesttesting.com/remediation-services/

- Digital Forensic Analysis Services (DFIR): https://www.pentesttesting.com/digital-forensic-analysis-services/

These services pair well with forensics-ready APIs because they focus on: identifying real gaps, prioritizing fixes, and validating outcomes with evidence you can trust.

Recommended Recent Reads

On Cyber Rely (more engineering-first, code-heavy posts)

- Forensics-ready logging deep dive: https://www.cybersrely.com/forensics-ready-saas-logging-audit-trails/

- Supply-chain CI hardening: https://www.cybersrely.com/supply-chain-ci-hardening-2026/

- KEV workflow for engineers: https://www.cybersrely.com/cisa-kev-engineering-workflow-exploited/

- Passkeys + token binding patterns: https://www.cybersrely.com/passkeys-token-binding-stop-session-replay/

🔐 Frequently Asked Questions (FAQs)

Find answers to commonly asked questions about Forensics-Ready APIs & Microservices Observability.